The BYOAI wave: How shadow AI impacts your workplace

April 03 · 8 min read

Let's say you're a product manager racing against tight deadlines. Someone on your team discovers an AI tool that promises instant product and feature roadmaps. All it needs is data to process, so they feed it the information it asks for. Unfortunately, this includes proprietary market research and internal documents, confidential details that were meant for the product team alone. Meanwhile, across the hall, a developer is in a similar bind: a critical client email requires an immediate response. To ensure a polished and accurate reply, they use a generative AI tool, feeding it snippets of the client's confidential project details. These are not isolated examples; they’re snapshots of a workplace quietly evolving through the rapid adoption of AI.

The deadlines are met, and the tasks are completed in record time—but at what cost? These AI tools were not approved by the IT department, and now sensitive data is on external servers, beyond the company’s control.

This is the paradox of shadow AI: tools that deliver efficiency and convenience but also introduce uncertainty and risk. In this article, we will explore how shadow AI is reshaping the workplace and why it is a potential liability.

Shadow IT vs shadow AI

In most organizations, employees do not have unrestricted access to all software tools. Only pre-approved, allow-listed applications are permitted, while others are either restricted or require explicit approval. However, there are gray areas, especially when employees need specific tools for short-term use. In such cases, they might bypass formal channels, procure the software on their own, and use it for official purposes. This phenomenon is shadow IT.

Shadow IT is the usage of tools and software outside of what is approved by the IT team, likely because users feel they are faster or more efficient. It might be the operations team using a free time-tracking app to stay on top of tasks. Or it could be a user sharing files via a personal mail account simply because they're already logged in. Sometimes, shadow IT emerges as a practical shortcut, like using an unapproved cloud storage service to quickly share large files with external vendors when the official file-sharing tool has size limitations.

What happens when this concept extends to artificial intelligence? This is where shadow AI comes into play—the unapproved, unsanctioned use of AI tools within organizations. With AI tools proliferating online, the risks, challenges, and benefits associated with shadow AI are becoming more pronounced. A deeper understanding is needed of its role in shaping workplace dynamics, security standards, and compliance strategies.

The primary driver of shadow AI is speed. Employees eager to enhance their productivity or solve pressing challenges turn to readily available AI tools instead of waiting for approval through formal channels. Modern platforms make this even easier by offering seamless integration or free features that quietly encourage adoption. However, restricting this behavior entirely could stifle innovation and limit the organization's adaptability.

The BYOAI wave: It’s already here (with or without you)

The stats do not lie: three out of four employees engaged in knowledge work are now actively using AI. In 2024, the adoption of generative AI surged, with usage nearly doubling. Among those who use AI, a whopping 75% opted to bring their own tools to the table. Employees are at the forefront of this revolution, making bring your own AI (BYOAI) the new normal in the workplace.

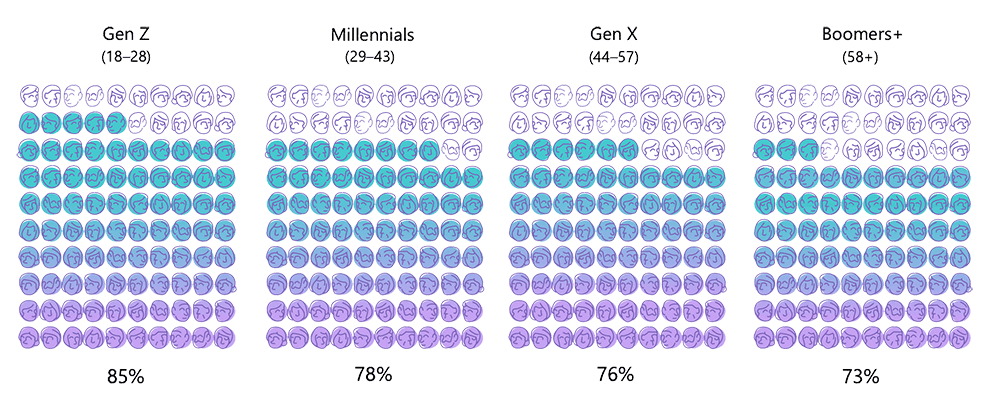

Employees across all age groups are embracing BYOAI in the workplace. Gen Z leads the way with 85% using their own AI tools, followed by Millennials at 78%, Gen X at 76%, and Baby Boomers at 73%. This widespread adoption highlights a workforce-driven shift toward AI innovation, spanning generations and reshaping workplace practices.

For larger organizations and enterprises, the challenges are more pronounced. With thousands of employees spread across different teams and departments, managing AI tool usage becomes a constant battle. Even with strict purchasing controls or endpoint restrictions, some employees find ways around them—whether by paying for software themselves or using free versions that don’t require approval.

For instance, marketing teams often use emerging AI tools to enhance campaigns with personalized content and automated social media engagement, driving more targeted marketing strategies. In some cases, shadow AI has even influenced purchasing decisions; the adoption of Microsoft Copilot is one example.

Before AI became a standard feature in workplace tools, many organizations relied on productivity applications without AI capabilities. However, demand for AI-powered features has grown over the past few years, and IT teams are increasingly evaluating potential solutions, a process often slowed by legal, security, and compliance constraints. Meanwhile, employees have begun experimenting with AI tools independently, sometimes inadvertently exposing sensitive data to external applications.

The risks of shadow AI

AI-powered tools can be a game-changer for productivity. However, using them without proper checks can have serious consequences, including corporate licensing and compliance issues, data leaks and IP theft, inconsistent governance, and a lack of centralized oversight.

Corporate licensing and compliance issues

Employees often use free or personal accounts for AI tools, not realizing they might be breaking company policies or licensing rules. For example, a designer might use their personal account on an AI design tool to create graphics for a campaign. If the tool’s license doesn’t allow commercial use, the company could face fines or legal repercussions. Moreover, if audits reveal widespread use of unapproved tools, the company’s reputation could take a hit. Clear communication about approved tools and proper licensing is essential to avoid such risks.

Data leaks and IP theft

Many AI tools need data to work, and employees might unknowingly share sensitive information. A developer might upload pieces of proprietary code to an AI debugging tool or use a transcription service for a confidential meeting recording. If these tools store or share the data without consent or proper protocol, it could lead to leaks.

Generative AI, in particular, poses the added risk of producing misinformation or exposing proprietary information if prompts are not carefully crafted. Even well-meaning employees might inadvertently introduce security gaps by pasting sensitive company data into a public AI model, making shadow AI a serious concern for organizations.

Consider a company that discovers an employee’s use of an AI chatbot has made sensitive financial data publicly accessible because the tool lacked proper security measures. The result is a significant data breach, leading to reputational damage, legal scrutiny, and potential financial penalties. To prevent this, companies need to evaluate tools carefully before employees use them.

Inconsistent governance

Without clear rules, employees may pick tools that do not align with company standards. This includes tools that lack strong encryption and those hosted in regions with weak data protection laws. These mismatched tools create security gaps and inefficiencies. In industries like healthcare or finance, this can also mean failing to meet critical regulations like GDPR or HIPAA. A consistent policy on approved AI tools can help companies avoid such risks.

Lack of centralized oversight

Shadow AI often operates under the radar of IT teams, making it hard to monitor which tools are being used or how secure they are. A sales team might adopt an AI platform to personalize client pitches. If IT isn’t involved, there’s no way to ensure the platform handles data securely. This lack of oversight can also lead to duplicated efforts and wasted resources, as different teams pay for or experiment with overlapping tools. Centralizing AI oversight helps ensure tools are secure, effective, and aligned with company goals.

Strategies to effectively manage shadow AI risks

While shadow AI has its benefits, governance mechanisms must be in place to mitigate its risks. Organizations can adopt the following strategies:

1. AI policy development

A comprehensive AI policy serves as a blueprint for how tools are used within an organization. Ramprakash Ramamoorthy, director of AI research at Zoho and ManageEngine, emphasizes that an AI usage policy should balance innovation and governance, providing clear guidelines without unnecessary restrictions that hinder productivity. The policy must address data security, privacy, and compliance, specifying the types of data AI tools can process and the necessary safeguards. Clearly defining usage boundaries is essential, particularly in decision-making for sensitive areas like hiring, finance, or legal matters.

Accountability is another key aspect, with designated ownership ensuring transparency and addressing potential ethical concerns such as bias and misinformation. A structured approval process for adopting new AI tools, along with a mechanism for employees to report problems or suggest improvements, helps maintain responsible AI use within the organization.

This policy should outline:

- Approved AI tools and their appropriate usage.

- Evaluation metrics for approving new tools based on team needs and productivity impact.

- Clear processes for testing and integrating offline AI models to reduce data leakage risks.

Key stakeholders—including IT, legal, compliance, and senior management—must collaborate to create a policy that aligns with both operational needs and security standards. For instance, a policy might specify that customer service teams use only pre-approved AI tools for drafting emails, with strict restrictions on uploading sensitive client data. Regular training on the policy ensures employees stay informed and compliant, while periodic reviews keep the guidelines relevant in a rapidly evolving AI landscape.
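One way to make a policy like this enforceable is to express it as data that tooling can check. The following is a minimal sketch, not a reference implementation: the tool name, team name, and data classifications are all hypothetical placeholders, and a real policy would define its own.

```python
from dataclasses import dataclass

# Hypothetical data classifications; a real policy would define its own.
PUBLIC, INTERNAL, CONFIDENTIAL = "public", "internal", "confidential"


@dataclass(frozen=True)
class ToolRule:
    tool: str               # name of an approved AI tool
    teams: frozenset        # teams allowed to use it
    allowed_data: frozenset  # data classifications the tool may process


# Illustrative entry only: the tool and team names are made up.
POLICY = [
    ToolRule(
        tool="approved-email-assistant",
        teams=frozenset({"customer_service"}),
        allowed_data=frozenset({PUBLIC, INTERNAL}),
    ),
]


def is_allowed(tool, team, data_class, policy=POLICY):
    """Return True only if an explicit rule permits this combination.

    Anything not listed is denied by default, which mirrors an
    allow-list approach to approved tools.
    """
    return any(
        rule.tool == tool and team in rule.teams and data_class in rule.allowed_data
        for rule in policy
    )
```

Here, `is_allowed("approved-email-assistant", "customer_service", CONFIDENTIAL)` returns `False`, matching the example of customer service teams being barred from uploading sensitive client data. Keeping the policy in a reviewable file also makes periodic reviews easier to track.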

2. User awareness

Employees need to be educated about the risks of shadow AI. Many employees do not realize that using unauthorized tools can expose sensitive data or lead to compliance issues. Programs should focus on real-world scenarios, like uploading internal documents to an AI tool or using one to draft emails with confidential client details.

These sessions should go beyond lectures. Interactive training, real-life case studies, and role-specific examples can help employees understand the fine line between productivity and risk. The goal is to help employees understand the risks and make smarter choices when using AI tools without driving them away from innovation.

3. Endpoint protection

Strong endpoint protection ensures that all devices—whether in the office, at home, or on the go—are shielded from unapproved software. Modern endpoint tools are designed to detect and restrict unauthorized applications, flagging risky actions like large data uploads to external servers. This level of monitoring enables IT teams to proactively identify and respond to potential threats. With a growing remote and hybrid workforce, endpoint protection must extend to personal devices.
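To illustrate the kind of rule an endpoint tool might apply, here is a simplified sketch that flags large uploads to destinations outside an approved list. It assumes upload events are already available as structured records; the host name, the 10 MiB threshold, and the `UploadEvent` fields are assumptions made for this example, not the behavior of any specific product.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration.
APPROVED_HOSTS = {"files.example-corp.internal"}
UPLOAD_LIMIT_BYTES = 10 * 1024 * 1024  # 10 MiB, an arbitrary threshold


@dataclass
class UploadEvent:
    device: str      # identifier of the endpoint
    host: str        # destination host of the upload
    bytes_sent: int  # size of the upload in bytes


def flag_risky_uploads(events, approved_hosts=APPROVED_HOSTS, limit=UPLOAD_LIMIT_BYTES):
    """Return events that send more than `limit` bytes to a non-approved host."""
    return [
        event
        for event in events
        if event.host not in approved_hosts and event.bytes_sent > limit
    ]
```

A real deployment would tune the threshold and combine it with other signals, since a size limit alone misses small but sensitive uploads.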

4. Controlled AI access

Innovation thrives when employees have room to experiment. However, unrestricted access to AI tools can lead to chaos. Controlled access offers a middle ground. Employees can test AI tools within a defined framework, using only non-sensitive or anonymized data during trials. For instance, a product team exploring a new AI tool for generating user insights could be granted time-limited access. Usage would be monitored to ensure compliance with security standards. Once the trial period ends, feedback can determine whether the tool is approved for broader use. This method not only reduces unauthorized experimentation but also ensures teams feel supported in their need to innovate.
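As a rough illustration of preparing non-sensitive data for a trial, the sketch below masks email addresses and long digit runs before text is shared with a tool. The patterns are assumptions chosen for this example; pattern-based redaction misses many kinds of sensitive data (names, addresses, free-text details), so it should be treated as a first filter rather than full anonymization.

```python
import re

# Simple illustrative patterns; they are not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_NUMBER_RE = re.compile(r"\b\d{8,}\b")  # e.g. account or phone numbers


def redact(text):
    """Replace email addresses and runs of 8+ digits with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return LONG_NUMBER_RE.sub("[NUMBER]", text)


sample = "Contact jane.doe@example.com about account 1234567890."
print(redact(sample))  # Contact [EMAIL] about account [NUMBER].
```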

Tracking and monitoring AI tool usage within an organization requires a combination of technical controls and employee awareness. Network monitoring tools, cloud access security brokers (CASBs), and endpoint security solutions can help detect unauthorized AI usage by flagging access to unapproved platforms.
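The detection step can be sketched as a pass over proxy or DNS log records. This example assumes the logs have already been parsed into `(user, host)` pairs, and both domain lists are hypothetical; in practice a CASB or network monitoring product would maintain and update such lists.

```python
from collections import Counter

# Hypothetical domain lists for illustration.
KNOWN_AI_DOMAINS = {"ai-chat.example", "ai-notes.example"}
APPROVED_AI_DOMAINS = {"ai-chat.example"}


def matches(host, domains):
    """True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def unapproved_ai_usage(records):
    """Count requests per (user, host) to known AI domains that are not approved."""
    counts = Counter()
    for user, host in records:
        if matches(host, KNOWN_AI_DOMAINS) and not matches(host, APPROVED_AI_DOMAINS):
            counts[(user, host.lower())] += 1
    return counts
```

Counts like these are arguably most useful as a signal of unmet demand: a tool that many people reach for is a natural candidate for formal evaluation.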

5. Evaluation process for AI tool approval

Determining which AI tools are approved or restricted requires a structured evaluation process that prioritizes security, reliability, and business relevance. Organizations should assess AI tools based on factors such as data handling practices, integration capabilities, vendor transparency, and adherence to regulatory requirements. IT and security teams must also consider whether an AI tool introduces vulnerabilities, particularly if it relies on external APIs or cloud-based models that process sensitive data. Beyond technical assessment, organizations should evaluate AI tools based on their real-world impact—whether they enhance productivity without introducing undue risks. A governance framework should be in place to periodically reassess approved tools, ensuring they remain compliant as AI regulations and capabilities evolve.
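A structured evaluation can be as simple as a weighted scorecard. The sketch below uses the four factors named above; the weights, the 1 to 5 scale, the approval threshold, and the minimum data-handling score are all assumptions for illustration, and each organization would set its own.

```python
# Hypothetical weights (summing to 1.0) for scores on a 1-5 scale.
WEIGHTS = {
    "data_handling": 0.4,
    "regulatory_adherence": 0.3,
    "vendor_transparency": 0.2,
    "integration": 0.1,
}
APPROVAL_THRESHOLD = 3.5  # assumed minimum weighted score
MIN_DATA_HANDLING = 3     # assumed hard floor, regardless of the total


def evaluate(scores):
    """Return (weighted_score, approved) for a dict of factor scores."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total = sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
    approved = total >= APPROVAL_THRESHOLD and scores["data_handling"] >= MIN_DATA_HANDLING
    return round(total, 2), approved
```

The hard floor on data handling reflects one possible design choice: a tool with strong integrations should not be approved if it handles data poorly. Re-running the same scorecard during periodic reassessments keeps decisions comparable over time.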

6. Data security

Companies can opt for AI tools that can operate within their own infrastructure rather than relying on external servers. This approach is referred to as localized AI. These localized AI solutions process data internally, on company-owned servers or devices, offering a secure environment for AI-driven tasks.

Offline AI models go further by processing data locally without needing an internet connection, so no information leaves the organization. This makes them well suited to tasks like document analysis or reporting in industries that handle highly sensitive data or are bound by strict privacy laws such as GDPR or HIPAA. These tools can offer many of the benefits of cloud-based options while avoiding the risk of data being sent to external servers.

7. Metrics and user feedback

Before adopting any AI tool, organizations should evaluate its relevance and productivity benefits. By actively seeking user suggestions and feedback, businesses can align their tool selection with team needs. For instance, during the evaluation of Microsoft Copilot, teams provided input on features that enhanced their workflows, leading to a more informed purchasing decision.

Final words

Shadow AI, much like shadow IT, is both a challenge and an opportunity. While it opens doors to quick innovation, it must be managed with effective governance, awareness programs, and the right tools to mitigate risks. The key lies in striking the right balance—giving users enough freedom to experiment while ensuring the organization’s security and compliance are not compromised.

Shadow AI isn’t a sign of rebellion; it’s a signal that employees are looking for better ways to get their work done. The answer isn’t just locking down unauthorized tools but understanding why they are being used and finding ways to meet those needs without sacrificing security or governance.