According to Gartner, AI Trust, Risk, and Security Management (AI TRiSM) is a framework that delivers "AI model governance, trustworthiness, fairness, reliability, robustness, efficacy and data protection." AI TRiSM enables the preemptive identification and mitigation of:

With AI TRiSM, organizations can contextually respond to the rapidly expanding AI-enabled attack surface by leveraging a consolidated stack of tools. AI TRiSM can also uphold several ethical principles pertaining to AI innovation and usage.

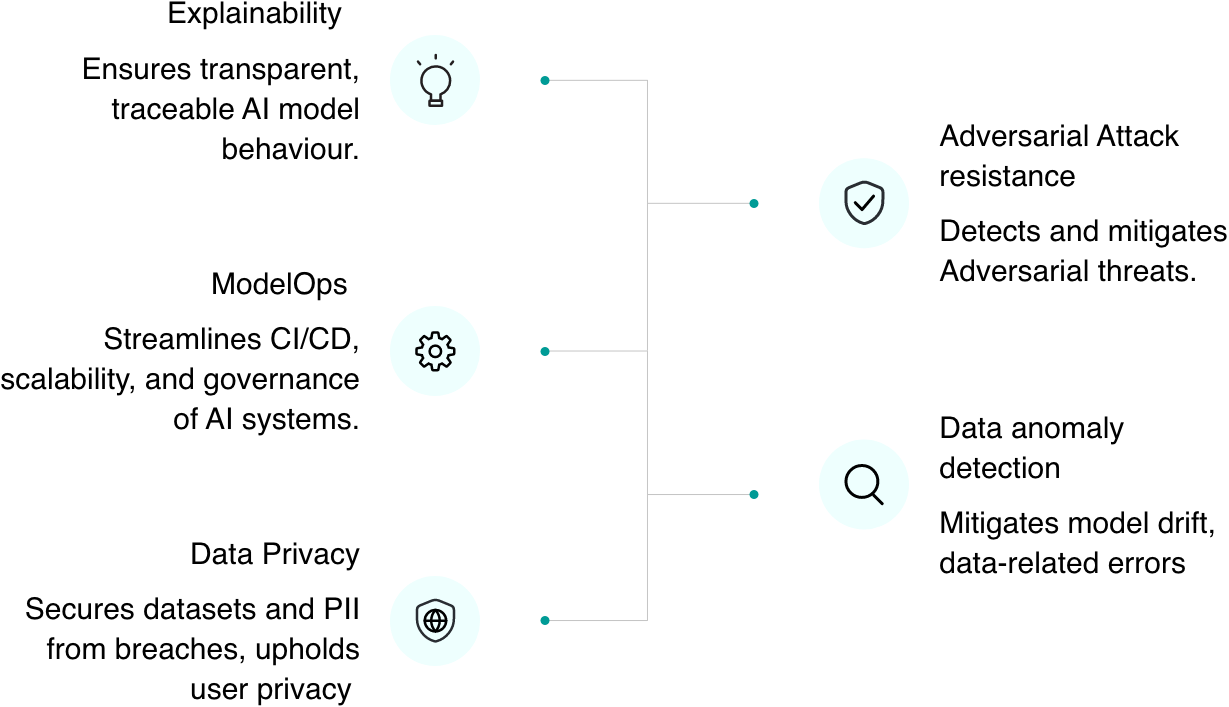

AI TRiSM is the combined deployment of five components:

AI TRiSM framework

Explainability refers to the processes that make AI systems, along with their inputs, outcomes, and mechanisms, unambiguous and understandable to human users. By discarding the black-box nature of traditional AI systems, the traceability of explainable AI (XAI) helps stakeholders identify capability gaps in a model and improve its performance. XAI also reassures users that the model is reliable and receptive to course correction.
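One widely used way to make a model's behavior more understandable is permutation importance: shuffle a single input feature and measure how much the model's accuracy drops. The sketch below is illustrative only. The toy `model` function, the sample data, and every name in it are assumptions made for this example, not part of Gartner's AI TRiSM material.

```python
import random

def model(row):
    # Hypothetical "black box": approves when income is above 50.
    # It ignores the second feature entirely.
    income, noise = row
    return 1 if income > 50 else 0

def accuracy(predict, rows, labels):
    # Fraction of rows where the prediction matches the label.
    correct = sum(1 for r, y in zip(rows, labels) if predict(r) == y)
    return correct / len(rows)

def permutation_importance(predict, rows, labels, feature_index, seed=0):
    # Importance = accuracy before shuffling minus accuracy after
    # shuffling the values of one feature across rows.
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    column = [r[feature_index] for r in rows]
    rng.shuffle(column)
    shuffled_rows = [
        tuple(column[i] if j == feature_index else v for j, v in enumerate(r))
        for i, r in enumerate(rows)
    ]
    return baseline - accuracy(predict, shuffled_rows, labels)

# Illustrative data: (income, noise) pairs labeled by the model itself.
rows = [(20, 3), (35, 9), (48, 1), (55, 7), (70, 2), (90, 5), (30, 8), (65, 4)]
labels = [model(r) for r in rows]

income_importance = permutation_importance(model, rows, labels, 0)
noise_importance = permutation_importance(model, rows, labels, 1)
```

A feature the model never reads scores exactly zero here, while a feature it depends on scores zero or higher. That gives stakeholders a simple, traceable signal about what drives an outcome.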

Similar to DevOps, ModelOps refers to the tools and processes that constitute the software development lifecycle of an AI-powered solution. According to Gartner, ModelOps' primary focus lies "on the governance and life cycle management of a wide range of operationalized artificial intelligence (AI) and decision models, including machine learning, knowledge graphs, rules, optimization, linguistic and agent-based models." The components of ModelOps include:

With ModelOps, AI development becomes scalable by design, which helps AI solutions keep scaling and improving across their entire life cycle.

Adversarial attacks manipulate AI and other deep learning systems into delivering rogue outcomes, as bad actors embed erroneous data (known as adversarial samples) into input data sets. These malicious inputs appear indistinguishable from existing data, which makes AI systems susceptible to cyberattacks. AI TRiSM hardens AI against adversarial attacks by introducing multiple processes, some of which include:
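To make the idea of an adversarial sample concrete, here is a minimal sketch of a fast-gradient-sign-style perturbation against a toy linear classifier. It is an assumption-laden illustration: the weights, the input, and the function names are all hypothetical, and real attacks target far larger models.

```python
def score(weights, bias, x):
    # Linear decision score: positive means class 1, otherwise class 0.
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_sample(weights, x, label, epsilon):
    # For a linear model, the gradient of the score with respect to the
    # input is the weight vector. Nudging every feature by epsilon against
    # the true class pushes the score toward the wrong side.
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model parameters and input.
weights = [0.8, -0.5, 0.3]
bias = -0.1
x = [0.4, 0.3, 0.2]

original_prediction = predict(weights, bias, x)
x_adv = adversarial_sample(weights, x, original_prediction, epsilon=0.1)
adversarial_prediction = predict(weights, bias, x_adv)
largest_change = max(abs(a - b) for a, b in zip(x_adv, x))
```

In this toy case, no feature moves by more than 0.1, yet the predicted class flips. Small perturbations of this kind are what make such inputs hard to tell apart from legitimate data.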

Data is the backbone of an AI program's development, as the program draws its generative and other critical capabilities from analyzing an exhaustive collection of data sets. Compromised AI data can result in anomalous, inaccurate, and potentially risky outcomes, such as biased results. Data anomaly detection keeps the integrity of AI systems intact by:
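As a hedged illustration of what a basic anomaly check on incoming data could look like, the sketch below flags outliers using the modified z-score, which is built on the median and the median absolute deviation. The function name, the sample readings, and the 3.5 threshold (a commonly cited default for this method) are assumptions for this example. Production systems typically combine several detection techniques.

```python
import statistics

def flag_anomalies(values, threshold=3.5):
    # Modified z-score: 0.6745 * (x - median) / MAD.
    # Median-based statistics stay stable even when outliers are present.
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        # All values are (nearly) identical; nothing can be scored.
        return []
    return [
        (index, value)
        for index, value in enumerate(values)
        if 0.6745 * abs(value - median) / mad > threshold
    ]

# Illustrative sensor-style readings with one corrupted entry.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 42.0, 10.2]
anomalies = flag_anomalies(readings)
```

Here only the reading of 42.0 at index 5 is flagged, so it can be reviewed or excluded before it influences training.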

Just as data accuracy is crucial to AI, data privacy carries equal importance, considering its direct impact on users. Fortifying data with application security controls and accounting for users' consent throughout the data processing journey form this integral component of AI TRiSM.
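The two ideas in this component, protecting the data and honoring consent, can be sketched in a few lines. The example below is a simplified assumption rather than a prescribed AI TRiSM control. It drops records without consent and replaces user identifiers with a keyed hash (HMAC-SHA256) before the data reaches a training pipeline. The record layout, `SECRET_KEY`, and the function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice this would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(user_id, key=SECRET_KEY):
    # Keyed hash so identifiers cannot be recomputed without the key.
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_training_records(records):
    prepared = []
    for record in records:
        # Skip anyone who has not given consent (missing consent counts as no).
        if not record.get("consent", False):
            continue
        prepared.append({
            "user": pseudonymize(record["user_id"]),
            "features": record["features"],
        })
    return prepared

# Illustrative input records.
raw_records = [
    {"user_id": "alice@example.com", "consent": True, "features": [0.2, 0.7]},
    {"user_id": "bob@example.com", "consent": False, "features": [0.9, 0.1]},
    {"user_id": "carol@example.com", "features": [0.5, 0.5]},
]
training_records = prepare_training_records(raw_records)
```

Only the first record survives, and its identifier is replaced by a 64-character hexadecimal digest, so the training set contains no raw email address.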

AI TRiSM has extensive applications for organizations that deploy and/or develop enterprise AI. Environments implementing AI TRiSM can avoid false positives and inaccuracies generated by their AI deployments, while making those deployments reliable and compliant with data privacy regulations.

Several AI regulatory frameworks, such as the European Union's AI Act and NIST's AI Risk Management Framework, emphasize the need for trustworthiness in AI design. With data and transparency of processes being the core functionalities that inform trustworthiness, AI TRiSM's data protection and explainability capabilities help ensure that the AI initiatives of organizations are reliable.

To see how AI TRiSM capabilities help build trustworthy AI in practice, take the case of the Danish Business Authority (DBA). This organization created a protocol for developing and deploying ethical AI systems, which involved two key processes:

By leveraging the framework, the DBA was able to develop, deploy, and manage 16 AI tools that oversee high-volume financial transactions worth billions of euros.

For organizations that are considering implementing AI TRiSM, or any other watchdog framework that governs AI assets, it is imperative to lay down the necessary groundwork to facilitate seamless implementation and functioning.

Educating employees on the core technologies of AI TRiSM and bridging skill gaps play a crucial role in enhancing human participation, which determines how well the framework functions and is optimized. Effective collaboration between disparate teams is key to the seamless transfer of knowledge between employees and, in turn, to faster employee education. After training, organizations should form a task force to manage their AI operations.

Any expansive architecture starts its journey from a unified standard that defines:

Comprehensible documentation helps organizational leaders educate key stakeholders and employees on the AI TRiSM framework and its applications. It also standardizes essential processes. To make the documentation and governance of AI TRiSM more dynamic, organizations should collaborate with subject matter experts and policymakers from diverse fields. For instance, to build an AI TRiSM framework that is effective at detecting social bias, the organization should have legal and social justice experts on board.

Business leaders and C-level executives overseeing the AI journeys within their respective organizations should mandate the necessary toolkits and infrastructure that favor XAI. Some of the important XAI processes include:

Organizations looking to deploy AI should minimize their attack surface by deploying robust security practices and frameworks within their network. To avoid overexposing AI systems and data sets, organizations should implement a Zero Trust architecture, Secure Access Service Edge (SASE), and other security programs that emphasize microsegmentation of critical resources, continuous evaluation and monitoring of assets, and contextual authentication and authorization of users.
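The combination of microsegmentation and contextual authorization can be sketched as a default-deny policy check that runs on every request. This is a simplified assumption for illustration, not a reference Zero Trust implementation. The segment names, roles, and the `AccessRequest` fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AccessRequest:
    user_role: str
    device_compliant: bool
    mfa_verified: bool
    resource_segment: str

# Hypothetical microsegments and the roles allowed to reach each one.
SEGMENT_POLICY = {
    "training-data": {"data-engineer", "ml-engineer"},
    "model-registry": {"ml-engineer"},
}

def authorize(request):
    # Default deny: unknown segments have no allowed roles.
    allowed_roles = SEGMENT_POLICY.get(request.resource_segment, set())
    # Every contextual signal must pass; network location is never trusted.
    return (
        request.user_role in allowed_roles
        and request.device_compliant
        and request.mfa_verified
    )

granted = authorize(AccessRequest("ml-engineer", True, True, "model-registry"))
wrong_segment = authorize(AccessRequest("data-engineer", True, True, "model-registry"))
unverified = authorize(AccessRequest("ml-engineer", True, False, "model-registry"))
unknown_segment = authorize(AccessRequest("ml-engineer", True, True, "billing"))
```

Only the first request is granted. A valid role on the wrong segment, a missing MFA check, or an unlisted segment each result in denial, which keeps AI systems and data sets from being over-exposed.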

Gartner's AI TRiSM Market Guide predicts that:

The guide also charted a five-point market roadmap of the AI TRiSM architecture.

Organizational leaders involved in building the AI TRiSM framework will collaborate with "data scientists, AI developers, security leaders, and business stakeholders" to ensure that they understand the significance of AI TRiSM tools and that these tools can be contextually applied to the security design of the framework.

This refers to the overlapping capabilities present within AI TRiSM. Features including ModelOps, explainability, model monitoring, continuous model testing, privacy functions (including privacy-enhancing technologies) and AI application security overlap with each other.

With the proliferation of AI TRiSM tools, ModelOps vendors are projected to widen their capabilities to accommodate the entire AI model lifecycle.

As organizations start adopting AI TRiSM tools, Gartner expects the AI TRiSM market to consolidate around two of its key capabilities: ModelOps and privacy functions.

Gartner predicts the introduction of AI-Augmented TRiSM, which orchestrates AI regulation with human oversight.