Bringing AI to your business? LLM observability makes sure it's done right

Summary

Large Language Models (LLMs) are transforming industries—from automating support to generating content and insights. But as these models grow in complexity, so does the need to monitor and understand their behavior in real time. That’s where LLM observability comes in. It goes beyond output tracking to offer deep visibility into how models process inputs, generate responses, and use resources.

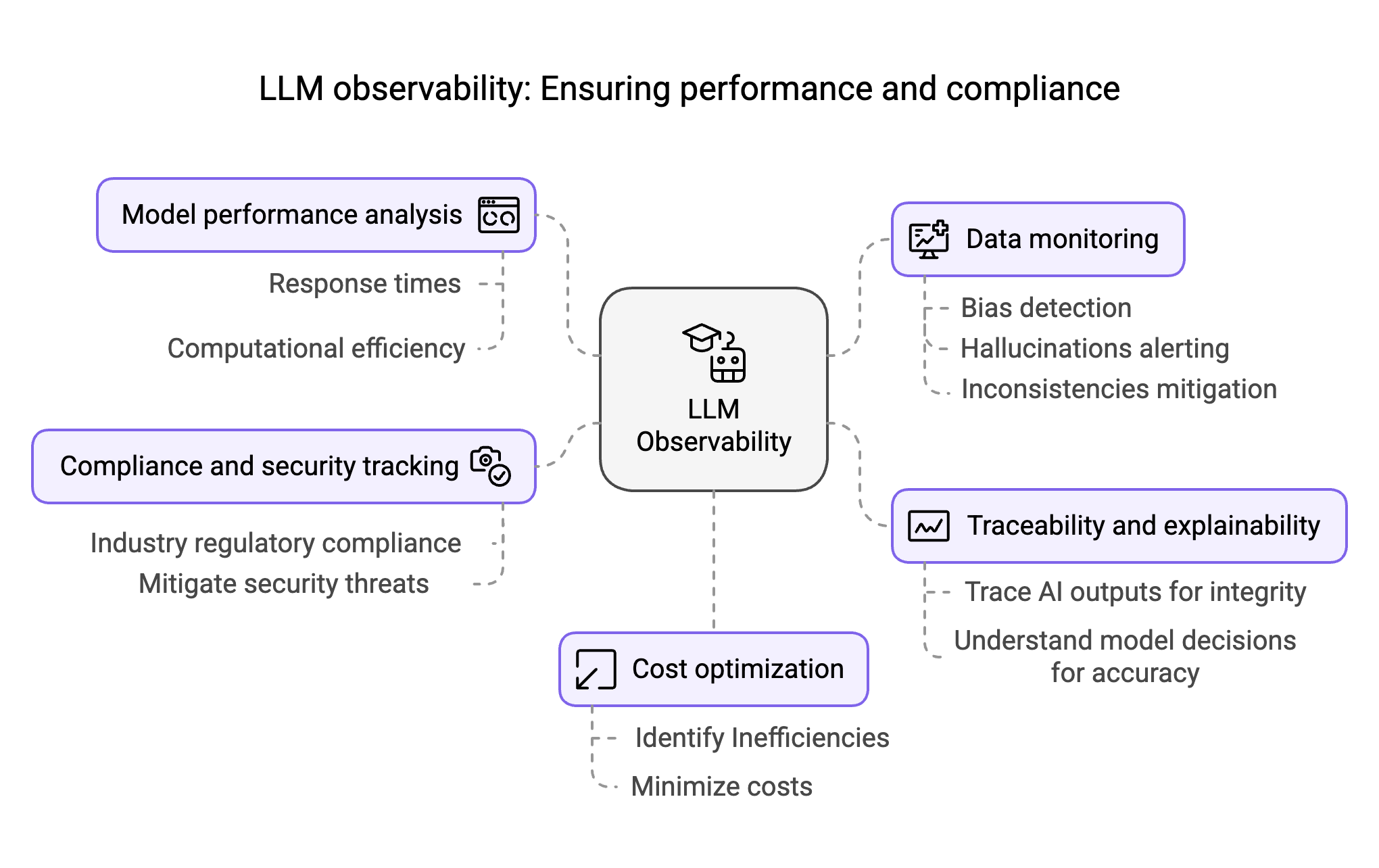

With observability, teams can detect bias, hallucinations, and inconsistencies, monitor performance, ensure compliance, and reduce infrastructure costs. It also enables explainability and traceability—critical for trust and regulatory needs. Whether you're optimizing customer experiences or safeguarding sensitive data, observability ensures your AI performs reliably, securely, and cost-effectively.


Large language models (LLMs) have become a cornerstone of AI-driven innovation, reshaping industries like healthcare, finance, and education. From improving customer service with chatbots to enhancing content creation and automating complex decision-making processes, the range of potential applications for LLMs is broad.

Given the complexity of LLMs, a real-time understanding of their behavior is essential. These models operate in an intricate web of data inputs, training procedures, algorithmic nuances, and predictive outputs, which makes it difficult to pinpoint where things go wrong.

How do we tackle this? Enter AI and LLM observability.

What is LLM observability?

At its core, LLM observability is the practice of monitoring, analyzing, and optimizing the behavior of large AI models in real time. Observability goes beyond simply measuring the output of a system; it provides deep insights into how and why LLMs produce specific responses, allowing organizations to monitor AI and LLM models at every step of their processing, from input ingestion to output generation. This understanding helps AI teams ensure that models function as expected, detect problems early, and make adjustments as needed. The goal is to improve the overall user experience and meet stringent business demands for performance, reliability, and regulatory compliance.

For instance, in a customer service application using an LLM, observability can help track whether AI-generated responses are contextually relevant, adhere to the desired tone, and follow predefined guidelines. This kind of monitoring lets businesses spot issues like language drift, unexpected errors, or bias in model responses, so teams can intervene quickly and prevent negative outcomes.
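To make the customer service example concrete, here is a minimal rule-based sketch of a per-response guideline check. The banned phrases, the 120-word limit, and the all-caps tone heuristic are hypothetical placeholders rather than rules from any real product; in practice, simple rules like these are often complemented by model-based evaluations of relevance and tone.

```python
from dataclasses import dataclass, field

# Hypothetical guidelines for a support bot; real rules come from your own policies.
BANNED_PHRASES = ("i don't know", "not my problem")
MAX_WORDS = 120


@dataclass
class GuidelineReport:
    passed: bool
    violations: list[str] = field(default_factory=list)


def check_guidelines(response: str) -> GuidelineReport:
    """Run simple rule-based checks on one AI-generated support reply."""
    violations = []
    lowered = response.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            violations.append(f"banned phrase: {phrase!r}")
    word_count = len(response.split())
    if word_count > MAX_WORDS:
        violations.append(f"too long: {word_count} words (limit {MAX_WORDS})")
    # Crude tone heuristic: an all-caps reply reads as shouting.
    if response.isupper():
        violations.append("tone: all caps")
    return GuidelineReport(passed=not violations, violations=violations)
```

Running `check_guidelines("That's not my problem.")` returns a failed report listing the banned phrase, which an observability pipeline could count and alert on over time.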

How does AI and LLM observability work?

LLM observability involves collecting, analyzing, and visualizing key performance metrics across different layers of your AI stack, giving teams real-time insight into model behavior so they can monitor and optimize it. But how exactly does it work? Here's a breakdown of the various components.
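At the lowest layer, observability starts with capturing a structured record for every model call. The sketch below assumes the model is exposed as a plain `prompt -> text` function and wraps it in a decorator that logs latency and approximate token counts. `LLMCallRecord`, `observed`, `approx_tokens`, and `fake_llm` are hypothetical names, and the whitespace token count is only a stand-in for the model's real tokenizer.

```python
import functools
import time
import uuid
from dataclasses import dataclass
from typing import Callable


@dataclass
class LLMCallRecord:
    call_id: str
    prompt: str
    response: str
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int


# In-memory sink for illustration only; a real system would export these
# records to a logging, metrics, or tracing backend.
RECORDS: list[LLMCallRecord] = []


def approx_tokens(text: str) -> int:
    # Crude whitespace approximation; real counts come from the model's tokenizer.
    return len(text.split())


def observed(llm_fn: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a prompt -> text function and record one telemetry event per call."""
    @functools.wraps(llm_fn)
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        response = llm_fn(prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        RECORDS.append(
            LLMCallRecord(
                call_id=uuid.uuid4().hex,
                prompt=prompt,
                response=response,
                latency_ms=latency_ms,
                prompt_tokens=approx_tokens(prompt),
                completion_tokens=approx_tokens(response),
            )
        )
        return response
    return wrapper


@observed
def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "Please restart the service and check the logs."
```

Because the decorator only sees inputs and outputs, it can wrap any client without changing call sites, and the records it produces are the raw material for the quality, performance, and cost metrics described in the following sections.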

Data monitoring to avoid biases, hallucinations, and inconsistencies

Data monitoring is crucial for ensuring that LLMs generate outputs that align with ethical guidelines and business objectives. It tracks both input and output data to enable:

- Bias detection: LLMs can inherit biases from the data they were trained on, which can surface as inappropriate outputs. Observability systems continuously check outputs against threshold criteria to flag these potential issues.

- Hallucination alerting: When LLMs generate information not grounded in reality, such as incorrect facts or fabricated details, data monitoring can detect these "hallucinations" and alert teams to intervene.

- Inconsistency mitigation: Observability tracks whether the model consistently produces reliable and coherent outputs. When the model's behavior deviates from expected results, monitoring tools raise alerts.

These insights allow for corrective actions that ensure the model adheres to accuracy, fairness, and business standards.
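The hallucination and consistency checks above can be approximated with cheap lexical heuristics. This sketch scores an answer's grounding as the share of its content words that also appear in the source context, and its consistency as the average word overlap across several sampled answers to the same prompt. Both heuristics, the stopword list, and the 0.6 and 0.5 thresholds are illustrative assumptions; lexical overlap is a weak proxy, and real deployments often use entailment models or LLM-based judges instead.

```python
import re
from itertools import combinations

STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and", "on", "for", "it"}

# Hypothetical alert thresholds; tune them on your own labeled data.
GROUNDING_THRESHOLD = 0.6
CONSISTENCY_THRESHOLD = 0.5


def content_words(text: str) -> set[str]:
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def grounding_score(answer: str, context: str) -> float:
    """Fraction of the answer's content words that also appear in the context."""
    answer_words = content_words(answer)
    if not answer_words:
        return 1.0
    return len(answer_words & content_words(context)) / len(answer_words)


def jaccard(a: set[str], b: set[str]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consistency_score(samples: list[str]) -> float:
    """Mean pairwise word overlap across repeated answers to the same prompt."""
    sets = [content_words(s) for s in samples]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def flag_response(answer: str, context: str, samples: list[str]) -> list[str]:
    flags = []
    if grounding_score(answer, context) < GROUNDING_THRESHOLD:
        flags.append("possible hallucination")
    if consistency_score(samples) < CONSISTENCY_THRESHOLD:
        flags.append("inconsistent answers")
    return flags
```

For example, with the context "The refund window is 30 days from delivery.", the answer "Refunds are available for 90 days after purchase." shares only one of its six content words with the context, so it is flagged as a possible hallucination.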

Model performance analysis for ensuring efficiency

Performance analysis is an essential part of LLM observability, focused on key metrics such as:

- Response times: For real-time applications like customer service bots or personal assistants, low response times are crucial for a good user experience. LLM observability helps identify slowdowns and performance bottlenecks.

- Computational efficiency: LLMs require significant computational resources. AI observability tools monitor CPU, memory, and GPU usage to ensure that the system is using resources efficiently. Identifying spikes or inefficiencies in resource usage helps optimize the deployment of models, preventing unnecessary resource consumption and potential slowdowns.

By analyzing these metrics, LLM observability tools help teams optimize model performance, improving speed and resource efficiency while reducing operational overhead.
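Response times are usually tracked as percentiles rather than averages, because a few very slow responses can hurt users while barely moving the mean. A minimal sketch using the nearest-rank percentile method, with a hypothetical 2,000 ms p95 latency budget:

```python
import math

# Hypothetical latency budget (service-level objective) for a chat assistant.
P95_BUDGET_MS = 2000.0


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, with p between 0 and 100."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(latencies_ms: list[float]) -> dict:
    p95 = percentile(latencies_ms, 95)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": p95,
        "p99_ms": percentile(latencies_ms, 99),
        "p95_over_budget": p95 > P95_BUDGET_MS,
    }
```

With latencies of `[400, 450, 500, 520, 600, 650, 700, 800, 900, 3500]` ms, the median is 600 ms and the mean is 902 ms, both comfortably under budget, yet the single 3.5-second outlier pushes p95 over it.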

Traceability and explainability for integrity and accuracy

Traceability and explainability are fundamental for improving trust and transparency in AI models. LLM observability systems enable:

- Traceability: Tracking the entire data pipeline from input to output. If a model generates a problematic or unexpected response, the source of the issue can be traced back to the specific data point or processing stage that caused it. This audit trail is particularly valuable for debugging, as well as for verifying the integrity of model decisions in regulated industries.

- Explainability: Insight into why the model made a specific decision. By breaking down the model's internal decision-making process, observability helps stakeholders understand and validate the reasoning behind each output, ensuring that the model is not just accurate, but also aligned with ethical and regulatory standards.

In industries like healthcare and finance, explainability is critical to ensure that AI decisions can be justified to both regulators and end users.
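Traceability across a multi-step pipeline is commonly implemented with nested spans that share a trace ID and point to their parent span, loosely mirroring the span model used by tracing standards such as OpenTelemetry. This is a self-contained sketch, not the OpenTelemetry API; `span`, `Span`, `answer_question`, the stand-in retrieval result, and the `example-model` name are all hypothetical.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    span_id: str
    parent_id: Optional[str]
    trace_id: str
    attributes: dict = field(default_factory=dict)
    duration_ms: float = 0.0


FINISHED: list[Span] = []  # in-memory sink for illustration
_current = ContextVar("current_span", default=None)  # span active in this context


@contextmanager
def span(name: str, **attributes):
    parent = _current.get()
    s = Span(
        name=name,
        span_id=uuid.uuid4().hex[:8],
        parent_id=parent.span_id if parent else None,
        # Child spans inherit the trace ID; a root span starts a new trace.
        trace_id=parent.trace_id if parent else uuid.uuid4().hex,
        attributes=dict(attributes),
    )
    token = _current.set(s)
    start = time.perf_counter()
    try:
        yield s
    finally:
        s.duration_ms = (time.perf_counter() - start) * 1000
        _current.reset(token)
        FINISHED.append(s)


def answer_question(question: str) -> str:
    with span("request", question=question):
        with span("retrieve") as r:
            docs = ["Refunds are accepted within 30 days."]  # stand-in retrieval
            r.attributes["doc_count"] = len(docs)
        with span("generate", model="example-model") as g:
            answer = "You can request a refund within 30 days."  # stand-in model call
            g.attributes["answer_chars"] = len(answer)
        return answer
```

Because each stage records its parent, a problematic answer can be walked back from the `generate` span to the `retrieve` span that supplied its context, which is the audit trail described above.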

Compliance and security tracking

LLM observability helps ensure that AI systems comply with industry regulations such as GDPR or HIPAA. It also monitors the security of the model, protecting sensitive data and ensuring privacy requirements are met.

By continuously tracking compliance and security, AI and LLM observability helps mitigate risks associated with legal non-compliance or data breaches, thus protecting both the organization and its users.
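One concrete compliance check is scanning model outputs for personal data before they leave the system. This sketch uses a few regular expressions for email addresses, US Social Security numbers, and US-style phone numbers; the patterns are illustrative assumptions and are nowhere near sufficient on their own to meet GDPR or HIPAA obligations.

```python
import re

# Illustrative patterns only; regexes catch obvious formats and miss many others.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scan_for_pii(text: str) -> dict[str, list[str]]:
    """Return every PII-like match in a model output, grouped by type."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[label] = found
    return hits


def redact(text: str) -> str:
    """Replace each match with a typed placeholder such as [REDACTED_EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED_{label.upper()}]", text)
    return text
```

Redacting with typed placeholders lets the event still be logged and counted for compliance reporting without storing the sensitive value itself.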

Cost optimization through better resource utilization

LLMs can be resource-intensive, especially when deployed at scale. Observability plays a key role in identifying inefficiencies in model execution that lead to unnecessary computational costs. This helps AI systems run efficiently, minimizing costs while maintaining performance.

For instance, LLM observability helps identify how models behave under different loads, such as during periods of high traffic. Based on these insights, organizations can scale infrastructure appropriately, either by adding resources to handle peaks or by optimizing the model to operate efficiently within existing capacity.
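With token-based pricing, cost attribution is simple arithmetic over usage data that observability already collects. The sketch below assumes separate per-1,000-token prices for input and output tokens; the model names and prices are hypothetical, so substitute your provider's published rates.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; replace with your provider's rates.
PRICE_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


@dataclass
class Usage:
    feature: str  # product feature that triggered the call
    model: str
    input_tokens: int
    output_tokens: int


def call_cost(u: Usage) -> float:
    price = PRICE_PER_1K[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def cost_by_feature(usages: list[Usage]) -> dict[str, float]:
    """Total spend per feature, to show where costs concentrate."""
    totals: dict[str, float] = defaultdict(float)
    for u in usages:
        totals[u.feature] += call_cost(u)
    return dict(totals)
```

For example, a `chat` call on `large-model` with 1,000 input and 500 output tokens costs (1,000 × 0.01 + 500 × 0.03) / 1,000 = $0.025, while a `summaries` call on `small-model` with 4,000 input and 1,000 output tokens costs $0.0035. Grouping by feature makes it easy to see which workloads might be moved to a cheaper model or trimmed of excess tokens.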

What are the benefits of LLM observability?

Implementing LLM observability solutions brings significant advantages to AI-driven businesses:

- Improved accuracy and reliability

Continuous monitoring of model outputs allows teams to detect and correct errors, hallucinations, or inconsistencies in real time. For example, an LLM might provide incorrect troubleshooting advice or raise a false alert, causing unnecessary actions or delays. With observability tools, businesses can track outputs in real time, spot such issues, and refine the model to produce more reliable, accurate results. This reduces the chance that hallucinations reach users and keeps the model aligned with business objectives, especially in mission-critical applications like incident management or system monitoring.

- Enhanced compliance and security

LLM observability helps businesses track AI-generated outputs to support compliance with regulations and standards such as GDPR, HIPAA, or SOC 2. For instance, in healthcare, where privacy is critical, observability tools can flag outputs that inadvertently expose sensitive data, preventing potential violations and security breaches.

- Cost reduction

By optimizing token usage, reducing model drift, and improving infrastructure efficiency, teams can lower operational costs. For example, observability tools can track resource consumption and identify where the model consumes more computational resources than necessary. This enables cost-saving optimizations, such as reducing excessive token usage or fine-tuning the model to run more efficiently.

- Better user trust and experience

LLM observability helps ensure that AI outputs are explainable, unbiased, and aligned with user expectations. For example, in customer support, observability tools help keep AI responses accurate, transparent, and fair, which builds trust with users.

- Faster troubleshooting and debugging

Real-time issue detection enables quick identification and resolution of errors, reducing downtime. If a model starts to produce inaccurate or flawed outputs, such as incorrect configurations or suggestions, observability tools allow teams to pinpoint the issue and address it before it disrupts operations. This is especially valuable in fast-paced environments like IT operations.

- Proactive model monitoring and adjustment

By continuously monitoring the model's performance, teams can detect model drift and adapt the model to new data or changes in the operating environment. In IT contexts like predictive maintenance, this keeps the model up to date and avoids missed patterns in system failures that could otherwise lead to operational inefficiencies.
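Drift detection generally means comparing a recent distribution of some metric against a baseline. The Population Stability Index (PSI) is one widely used way to quantify that shift; it is a swapped-in technique, not one the article prescribes. This sketch assumes equal-width bins over the combined range, and the monitored metric (for example, response length) is a hypothetical choice.

```python
import math


def psi(baseline: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of one numeric metric."""
    # Shared equal-width bins spanning both samples.
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # guard against identical min and max

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A commonly cited rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant drift worth investigating; treat these cutoffs as starting points to calibrate against your own data.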

What should you look for in an LLM observability solution?

Choosing the right LLM observability platform requires evaluating key features that support your AI and business needs. Here's what to look for:

- Real-time monitoring: Continuous tracking of AI performance and response quality.

- Comprehensive logging and tracing: Ability to trace outputs back to their inputs and identify root causes.

- Bias detection and mitigation tools: Features to detect, audit, and correct biases.

- Security and compliance enforcement: Built-in tools to meet data privacy regulations and corporate governance policies.

- Cost and efficiency analytics: Insights into token usage, model drift, and infrastructure costs.

- Drift detection: Identify changes in model behavior over time to prevent performance degradation.

- Feedback integration: Incorporate human feedback loops to continuously refine AI outputs.

- Anomaly detection: Automatically flag unusual AI behavior that could indicate security threats or systemic failures.

- Scalability and adaptability: Ensure the observability solution can grow with your AI infrastructure.

- Multi-model support: Capability to monitor multiple AI models across different use cases and environments.
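A rolling z-score is one simple way to implement the anomaly detection feature listed above: it compares each new value of a metric, such as tokens per request or errors per minute (hypothetical choices), with the mean and standard deviation of a recent window. The window size, 3.0 threshold, and minimum sample count are assumptions to tune, and this is a generic statistical technique rather than how any particular product works.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags values more than `threshold` standard deviations from the recent mean."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        is_anomaly = False
        # Only judge once the window holds enough history to be meaningful.
        if len(self.values) >= self.min_samples:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly
```

In this sketch, flagged values still enter the window, which keeps the detector simple but lets a sustained spike gradually become the new baseline; excluding flagged values from the window is a reasonable alternative design.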

LLM observability is critical for ensuring the performance, security, and cost-efficiency of AI-powered applications. By monitoring and analyzing key metrics, organizations can build trustworthy, high-performing AI solutions while mitigating risks. Investing in a robust LLM observability solution helps you stay compliant, optimize costs, and deliver reliable AI-driven experiences at scale.