A CIO’s guide to service mesh: What it is, benefits, and best practices

Summary

This article is a comprehensive guide for CIOs exploring service mesh architectures in cloud-native environments. It demystifies what a service mesh is, how it functions through sidecar proxies and control/data planes, and why it's a critical infrastructure layer for managing microservices communication. The piece explains its core components, compares it to API gateways, and outlines key benefits such as observability, security, resiliency, and deployment control. It also addresses common implementation challenges—like performance overhead and operational complexity—and advocates for a phased, learning-focused adoption strategy to ensure enterprise-wide success.

In the era of digital transformation, microservices have become the standard for building scalable, modular applications. But as systems get more distributed, the complexity of managing service-to-service communication skyrockets. That’s where service mesh comes into play.

If you’re a CIO navigating the cloud-native landscape, understanding what a service mesh does and how it fits into your IT architecture is no longer optional. It’s essential. Let’s break it down in plain terms.

What is a service mesh?

At its core, a service mesh is an infrastructure layer that handles how microservices communicate with each other. It provides tools for traffic management, observability, security, and reliability without requiring changes to the services themselves.

Think of it as a kind of "smart network overlay" that makes your microservices environment more predictable, secure, and easier to manage. Rather than having developers build logic for retries, encryption, or load balancing into every microservice, a service mesh handles it all externally through a layer of proxies.

How does service mesh work?

Service mesh pairs each microservice with a sidecar proxy

In a service mesh architecture, each service instance is paired with a sidecar proxy that runs alongside it, typically as a separate container in the same Kubernetes pod, or as a separate process on the same virtual machine. This proxy intercepts all inbound and outbound network traffic without requiring any changes to the application code. As a result, the application can stay focused on its core business logic, while the proxy handles important operational tasks behind the scenes.

The implementation typically works as follows:

- The sidecar proxy shares the network namespace with the service it supports.

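- All network traffic is transparently redirected through the proxy (in Kubernetes-based meshes such as Istio and Linkerd, typically via iptables rules configured when the pod starts), and the proxy communicates with the service via localhost.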

- The proxy handles service-to-service communication features including load balancing, encryption, retry logic, timeouts, and observability.
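
To make the interception idea concrete, here is a minimal Python sketch; it is not how a real proxy such as Envoy is built. It assumes the application is a plain function standing in for the service on localhost, and the `Sidecar` class, its retry count, and its counters are hypothetical. Every request passes through `handle()`, which forwards it, retries connection errors, and records basic metrics, so the business function itself contains none of that logic.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Sidecar:
    """Toy sidecar: every call to the local app goes through handle()."""
    app: Callable[[str], str]  # stands in for the service on localhost
    max_retries: int = 2       # extra attempts after the first failure
    metrics: Dict[str, int] = field(
        default_factory=lambda: {"requests": 0, "retries": 0, "failures": 0})

    def handle(self, request: str) -> str:
        """Intercept a request, retry connection errors, count outcomes."""
        self.metrics["requests"] += 1
        last_error = None
        for attempt in range(self.max_retries + 1):
            if attempt > 0:
                self.metrics["retries"] += 1
            try:
                return self.app(request)  # forward to the application
            except ConnectionError as exc:
                last_error = exc          # treat as transient and retry
        self.metrics["failures"] += 1
        raise RuntimeError(f"upstream failed after retries: {last_error}")


def checkout(request: str) -> str:
    """Business logic only: no retry, TLS, or metrics code here."""
    return f"200 OK for {request}"


proxy = Sidecar(app=checkout)
print(proxy.handle("GET /cart"))  # 200 OK for GET /cart
```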

Control plane and data plane enable microservice communication in a service mesh architecture

Data plane and service mesh traffic handling

The data plane is the layer that handles all network traffic within the service mesh. Its sidecar proxies intercept every request and response, both inbound to and outbound from the service, and perform critical functions such as:

- Service discovery

- Load balancing

- Request routing

- Traffic encryption and authentication

- Metrics collection and logging

Because the data plane processes live traffic, it is optimized for performance. And because each service instance gets its own proxy, the data plane scales out as the services themselves scale.
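
Service discovery and load balancing can be sketched together as a lookup followed by a selection policy. This is a minimal sketch under stated assumptions: `Registry` is a hypothetical in-memory stand-in for the endpoint data a control plane would supply, and round robin is just one of several policies that proxies such as Envoy support (least request and consistent hashing are others).

```python
import itertools
from typing import Dict, Iterator, List


class Registry:
    """Hypothetical registry: service name -> list of healthy endpoints."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._cursors: Dict[str, Iterator[str]] = {}

    def register(self, service: str, endpoints: List[str]) -> None:
        """Replace a service's endpoints (e.g. after a scale-up)."""
        self._endpoints[service] = list(endpoints)
        # A fresh round-robin cursor over the new endpoint list.
        self._cursors[service] = itertools.cycle(self._endpoints[service])

    def pick(self, service: str) -> str:
        """Discovery (look up endpoints) plus round-robin balancing."""
        if not self._endpoints.get(service):
            raise LookupError(f"no healthy endpoints for {service!r}")
        return next(self._cursors[service])


registry = Registry()
registry.register("payments", ["10.0.0.5:8080", "10.0.0.6:8080"])
print([registry.pick("payments") for _ in range(3)])
# ['10.0.0.5:8080', '10.0.0.6:8080', '10.0.0.5:8080']
```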

Control plane guides the data plane with configurations

The control plane acts as the brain of the service mesh. It manages the overall behavior of the data plane by providing configuration and policy management. While it doesn’t handle live traffic itself, it plays a critical role by:

- Defining and distributing routing rules

- Managing security policies (e.g., mTLS, access control)

- Configuring observability features like tracing and logging

- Coordinating all sidecar proxies to behave as a unified system

Administrators interact with the control plane using command-line tools, APIs, or graphical interfaces to define how traffic should flow across the mesh. Once configured, the control plane pushes these policies out to the proxies in the data plane.
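
This push model can be approximated with a few classes. It is a hypothetical sketch, not a real mesh API: `ControlPlane`, `DataPlaneProxy`, and the versioned `ProxyConfig` are illustrative names. In Istio, for example, the control plane streams configuration to Envoy proxies over Envoy's xDS APIs; the sketch only models the core idea that one administrative change fans out to every proxy and that stale versions are ignored.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class ProxyConfig:
    """Versioned snapshot pushed to proxies (illustrative shape)."""
    version: int = 0
    routes: Dict[str, str] = field(default_factory=dict)  # host -> version


class DataPlaneProxy:
    """Applies whatever the control plane sends; admins never touch it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config = ProxyConfig()

    def apply(self, config: ProxyConfig) -> None:
        # Ignore stale or duplicate pushes that arrive out of order.
        if config.version > self.config.version:
            self.config = config


class ControlPlane:
    """Single source of truth that fans configuration out to all proxies."""

    def __init__(self) -> None:
        self._proxies: List[DataPlaneProxy] = []
        self._config = ProxyConfig()

    def connect(self, proxy: DataPlaneProxy) -> None:
        self._proxies.append(proxy)
        proxy.apply(self._config)  # late joiners get the current snapshot

    def set_route(self, host: str, target: str) -> None:
        """What an admin's CLI or API call would ultimately trigger."""
        routes = {**self._config.routes, host: target}
        self._config = ProxyConfig(self._config.version + 1, routes)
        for proxy in self._proxies:
            proxy.apply(self._config)


mesh = ControlPlane()
early, late = DataPlaneProxy("cart-sidecar"), DataPlaneProxy("web-sidecar")
mesh.connect(early)
mesh.set_route("reviews", "v2")
mesh.connect(late)
print(early.config == late.config)  # True
```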

Control plane vs. data plane

| Aspect | Data plane | Control plane |
|---|---|---|
| Function | Handles actual network traffic between services | Manages configuration, policies, and coordination across the mesh |
| Components | Sidecar proxies deployed with each service | Centralized management server or system |
| Traffic handling | Yes: intercepts and processes every request and response | No: does not touch live traffic |
| Responsibilities | Service discovery, load balancing, routing, encryption, authentication, logging | Defining routing rules, security policies, observability settings |
| Scalability focus | Scales with service instances and traffic volume | Scales with the number of services and complexity of configuration |
| Performance impact | Sits on every request path, so it is optimized for low latency and high throughput | Minimal performance impact; operates out-of-band |
| Admin interaction | No direct interaction; operates autonomously based on control plane configs | Yes: accessed via UI, CLI, or API |
| Update frequency | Frequently updated based on traffic and routing needs | Less frequent updates, typically when configurations or policies change |
| Goal | Efficient traffic handling and observability | Centralized control, consistency, and policy enforcement |

How it all fits with microservices

In a microservices setup, dozens of services are constantly talking to one another. Without a service mesh, each team might handle things like retries, timeouts, or encryption differently, creating a mess of inconsistent practices and hard-to-troubleshoot issues.

With a mesh in place:

- Services stay focused on business logic

- Communication concerns are handled uniformly

- Operations and security teams gain centralized control

It decouples communication logic from application code and puts it into the infrastructure where it can be managed at scale.

Service mesh vs. API gateway

They sound similar, but they serve different purposes. Here's how they differ:

| Feature | Service Mesh | API Gateway |
|---|---|---|
| Scope | Internal (east-west) traffic | External (north-south) traffic |
| Role | Manages inter-service communication | Manages client-to-service communication |
| Components | Sidecar proxies and control plane | Gateway proxy, routing rules |
| Use case | Secure, observable microservice communication | Authentication, rate-limiting, request routing |

In most modern architectures, you need both. The API gateway handles ingress, while the service mesh manages internal service communication.

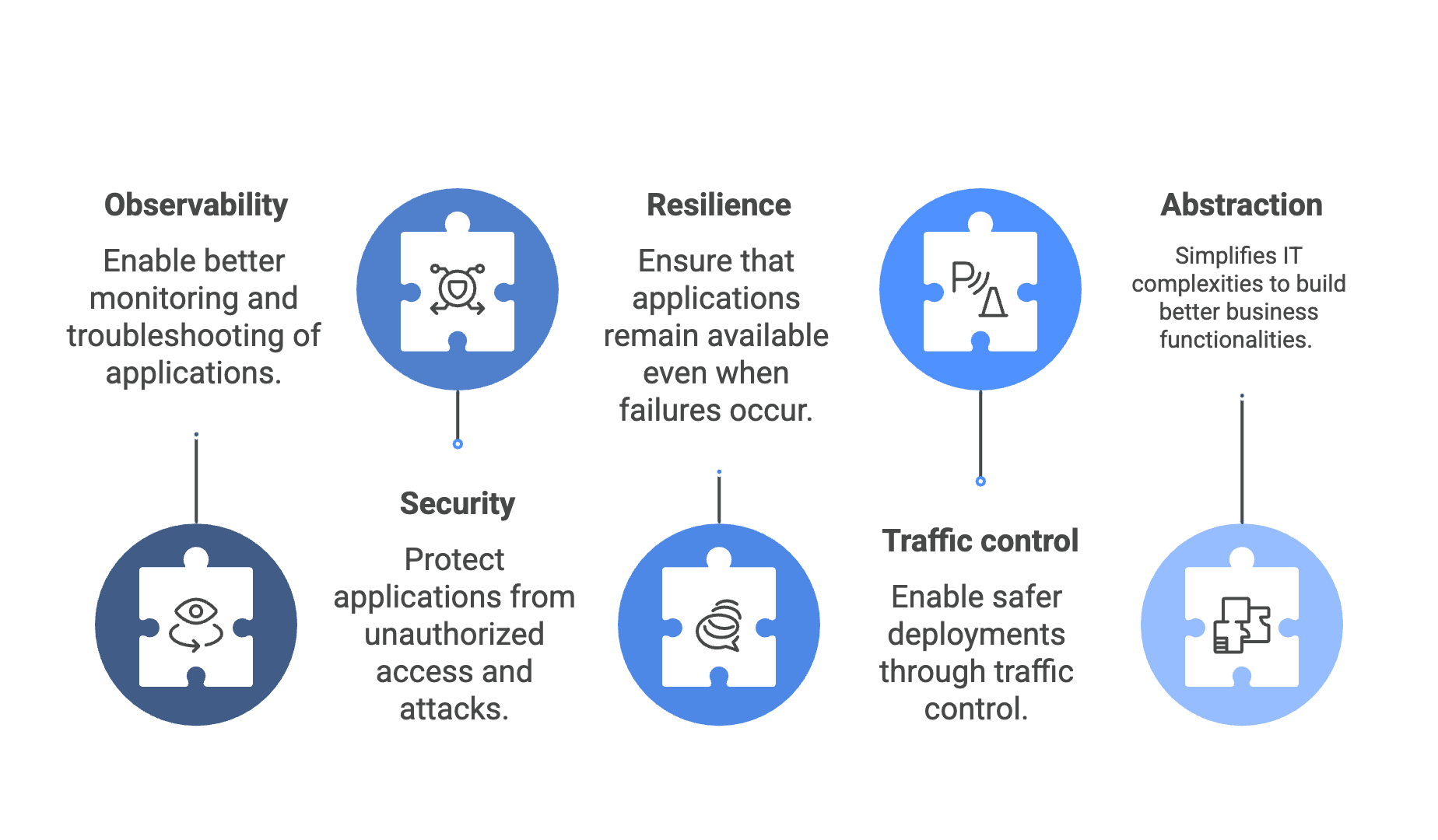

Benefits of using a service mesh architecture in enterprise IT

Adopting a service mesh brings clear advantages for managing modern, cloud-native applications. From a CIO’s perspective in particular, it enables better governance, enhanced reliability, and greater development agility across distributed systems. Here are some of the most impactful benefits, each with an illustrative use case:

1. Observability is built into your IT architecture from the start

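A service mesh automatically collects telemetry data, such as metrics, logs, and distributed traces, for every service interaction. Because this data is gathered at the proxy level, developers don’t need to instrument their code for most metrics and logs; for distributed traces to connect end to end, however, applications typically still need to forward trace-context headers on their outgoing requests. This telemetry provides deep visibility into system behavior and is crucial for monitoring, debugging, and performance tuning.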

Use case: An online marketplace can use built-in tracing to identify bottlenecks in the checkout process. With detailed request-level visibility, the operations team can quickly trace an issue to a latency spike in the payment service and resolve it before the customer experience is impacted.
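
The marketplace scenario boils down to grouping proxy-reported request data by destination and looking for the outlier. The sketch below assumes a simplified span record (`Span`, a hypothetical shape) and uses made-up latency numbers purely for illustration; real meshes export this data to backends such as Prometheus, Jaeger, or Zipkin rather than to an in-process list. The percentile uses the nearest-rank method.

```python
import math
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Span(NamedTuple):
    """One service-to-service hop as a sidecar might report it."""
    source: str
    destination: str
    latency_ms: float


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def p95_by_destination(spans: List[Span]) -> Dict[str, float]:
    """Group proxy-reported spans by destination service and take p95."""
    buckets: Dict[str, List[float]] = defaultdict(list)
    for span in spans:
        buckets[span.destination].append(span.latency_ms)
    return {dest: percentile(vals, 95) for dest, vals in buckets.items()}


# Made-up latencies for a checkout flow, purely for illustration.
spans = (
    [Span("checkout", "inventory", ms) for ms in (12, 15, 11, 14)]
    + [Span("checkout", "payments", ms) for ms in (40, 45, 900, 42)]
)
p95 = p95_by_destination(spans)
slowest = max(p95, key=p95.get)
print(slowest, p95[slowest])  # payments 900
```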

2. Security is enforced at the communication layer

Service meshes enable encrypted service-to-service communication via mutual TLS (mTLS), and offer fine-grained policy controls such as service-level access restrictions. These capabilities help meet regulatory and compliance requirements by enforcing consistent security policies across environments.

Use case: A healthcare provider can use service mesh features to ensure that only authorized services can access patient data. All traffic between internal APIs is encrypted by default, helping the organization stay compliant with HIPAA and internal audit policies.
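
A service-level access restriction is essentially a default-deny lookup keyed on the caller's identity, which mTLS makes trustworthy because the identity comes from a verified client certificate. In this minimal sketch the `POLICY` table, service names, and namespaces are hypothetical; the identities follow the SPIFFE URI form that Istio, for example, places in workload certificates. The `mtls_server_context` helper uses Python's standard `ssl` module to show what "mutual" means on the server side (a client certificate is required); in a mesh, the sidecar does this rather than the application.

```python
import ssl
from typing import Dict, Set

# Hypothetical policy: destination service -> caller identities allowed.
# Identities use the SPIFFE URI form (spiffe://<trust-domain>/ns/<ns>/sa/<sa>).
POLICY: Dict[str, Set[str]] = {
    "patient-records": {
        "spiffe://cluster.local/ns/clinical/sa/ehr-frontend",
        "spiffe://cluster.local/ns/clinical/sa/billing",
    },
}


def is_allowed(source_identity: str, destination: str) -> bool:
    """Default deny: unknown destinations and unlisted callers are refused."""
    return source_identity in POLICY.get(destination, set())


def mtls_server_context(certfile: str, keyfile: str,
                        cafile: str) -> ssl.SSLContext:
    """Server-side TLS that also requires a client certificate (the
    "mutual" in mTLS). In a mesh the sidecar does this, not the app."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.verify_mode = ssl.CERT_REQUIRED        # no client cert, no connection
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    ctx.load_verify_locations(cafile=cafile)   # trust only the mesh's CA
    return ctx


print(is_allowed("spiffe://cluster.local/ns/clinical/sa/billing",
                 "patient-records"))   # True
print(is_allowed("spiffe://cluster.local/ns/marketing/sa/web",
                 "patient-records"))   # False
```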

3. Resilience is built into the infrastructure

The mesh provides reliability patterns like automatic retries, circuit breakers, failover handling, and timeouts, all of which can be configured independently of the application code. These features help ensure service availability, even when some components fail or degrade.

Use case: A travel booking platform can use the service mesh’s circuit breaker and failover logic to automatically route around a failing third-party hotel booking service, maintaining uptime and preventing a poor user experience during external outages.
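
The circuit-breaker behavior in the travel example can be modeled as a small state machine: closed while calls succeed, open after repeated failures (so callers get the fallback immediately), and half-open after a cooldown so a trial request can probe the upstream. This is a hypothetical sketch with illustrative thresholds and partner names; Istio, for instance, configures the equivalent through outlier detection and connection-pool settings rather than application code. The injectable `clock` makes the cooldown easy to test.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Closed -> open after N consecutive failures -> half-open after cooldown."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after_s:
            return "half-open"            # allow a trial request through
        return "open"

    def call(self, primary: Callable[[], str],
             fallback: Callable[[], str]) -> str:
        if self.state() == "open":
            return fallback()             # fail fast, skip the sick upstream
        try:
            result = primary()
        except ConnectionError:
            self.failures += 1
            if (self.state() == "half-open"
                    or self.failures >= self.failure_threshold):
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0
        self.opened_at = None             # a success closes the circuit
        return result


def hotel_partner() -> str:
    raise ConnectionError("third-party hotel API unreachable")


def backup_partner() -> str:
    return "rooms from backup provider"


breaker = CircuitBreaker(failure_threshold=2)
for _ in range(3):
    print(breaker.call(hotel_partner, backup_partner), breaker.state())
```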

4. Traffic control enables safer deployments

Service meshes allow for intelligent routing and traffic shaping, which is especially valuable during deployments. Teams can gradually shift traffic to new versions using canary releases or perform A/B testing while monitoring system performance in real time.

Use case: A fintech startup can test a new fraud detection algorithm by routing only 5% of traffic to the updated service. With metrics and error tracking in place, the team can evaluate the results safely and expand the rollout gradually, shifting traffic through mesh configuration rather than through changes to application code or infrastructure.
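
A 5% canary split needs a rule for assigning each request to a version. The sketch below hashes a user ID into one of 100 buckets so the same user consistently lands on the same version; that stickiness is a design choice made here, since mesh routing rules commonly split traffic by per-request weights instead. The service names `fraud-v1` and `fraud-v2` are hypothetical.

```python
import hashlib


def route(user_id: str, canary_percent: int = 5) -> str:
    """Sticky weighted routing: hash each user into one of 100 buckets."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100   # 0..99
    return "fraud-v2" if bucket < canary_percent else "fraud-v1"


users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u) == "fraud-v2" for u in users) / len(users)
print(f"canary share: {share:.1%}")  # roughly 5%
```

Because buckets below the threshold stay in the canary, raising `canary_percent` widens the rollout without moving users who are already on the new version back to the old one.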

5. Abstraction makes building business functionality easier

By abstracting the complexity of inter-service communication, the service mesh allows developers to focus on building business functionality. They no longer need to manage retries, encryption, or load balancing within their services—all of that is handled consistently by the mesh.

Use case: In a large enterprise with multiple teams, developers can deploy new services independently without worrying about implementing common networking concerns. The platform team manages policies and observability centrally through the mesh, reducing duplication and increasing developer productivity.

Challenges in service mesh implementation

While a service mesh offers significant benefits, it also introduces new layers of complexity. Like any powerful tool, successful adoption requires careful planning and a realistic understanding of the trade-offs involved. Here are some of the key challenges organizations often face:

Operational complexity can grow quickly

Running a service mesh across multiple clusters, environments, or cloud providers adds operational overhead. Managing configurations, upgrades, and policy consistency across diverse deployments can become a significant responsibility for platform and DevOps teams.

Consideration: Organizations with large-scale infrastructure should invest in automation and centralized control tools to streamline mesh operations and reduce manual effort.
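
One common automation task is detecting policy drift between clusters. This minimal sketch, with hypothetical cluster names and a deliberately simplified policy dictionary, fingerprints each cluster's configuration after normalizing key order and then reports clusters that differ from a chosen baseline. The `STRICT` and `PERMISSIVE` values mirror Istio's mTLS modes, but the dictionary shape is an assumption, not a real resource schema.

```python
import hashlib
import json
from typing import Dict, List


def fingerprint(policy: dict) -> str:
    """Stable short hash: key order and whitespace do not affect it."""
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def drifted_clusters(configs: Dict[str, dict], baseline: str) -> List[str]:
    """Names of clusters whose policy differs from the baseline cluster."""
    expected = fingerprint(configs[baseline])
    return sorted(name for name, policy in configs.items()
                  if fingerprint(policy) != expected)


# Hypothetical, heavily simplified per-cluster mesh policies.
configs = {
    "us-east": {"mtls_mode": "STRICT", "retries": 2},
    "eu-west": {"retries": 2, "mtls_mode": "STRICT"},       # same content
    "ap-south": {"mtls_mode": "PERMISSIVE", "retries": 2},  # drifted
}
print(drifted_clusters(configs, baseline="us-east"))  # ['ap-south']
```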

There is a measurable performance overhead

Because sidecar proxies are injected alongside each service instance, they consume additional CPU and memory, and each extra proxy hop adds some latency to requests. While this is often acceptable for smaller deployments, the impact can be substantial in high-density environments or cost-sensitive workloads.

Consideration: Benchmark the resource overhead in staging environments and factor this into capacity planning, especially when deploying to production at scale.
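
Benchmark results translate into a capacity plan with simple multiplication. In the sketch below, the per-sidecar figures (100 millicores of CPU and 64 MiB of memory) and the pod count are placeholders, not measurements; substitute the numbers from your own staging benchmarks.

```python
from dataclasses import dataclass


@dataclass
class SidecarCost:
    cpu_millicores: float  # per-sidecar CPU from your staging benchmark
    memory_mib: float      # per-sidecar memory from your staging benchmark


def mesh_overhead(pods: int, cost: SidecarCost) -> dict:
    """Scale one sidecar's measured cost to the whole fleet."""
    return {
        "cpu_cores": pods * cost.cpu_millicores / 1000,  # 1000m = 1 core
        "memory_gib": pods * cost.memory_mib / 1024,     # 1024 MiB = 1 GiB
    }


# Placeholder figures, not measurements: replace with benchmark results.
print(mesh_overhead(400, SidecarCost(cpu_millicores=100, memory_mib=64)))
# {'cpu_cores': 40.0, 'memory_gib': 25.0}
```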

The learning curve can slow down adoption

Service mesh introduces a new set of concepts—like traffic shifting, policy enforcement, mTLS, and control/data plane separation—that require teams to build both conceptual and operational knowledge. This can be a barrier, particularly for teams new to cloud-native patterns.

Consideration: Invest in training and onboarding programs for developers and operators. Start with low-risk workloads before scaling mesh adoption across the organization.

Integration with existing tooling isn't always seamless

Aligning service mesh telemetry, logging, and security features with your existing observability stack or compliance frameworks may take effort. Tools like Prometheus, Grafana, or SIEM platforms may need to be reconfigured to handle mesh-native data formats or APIs.

Consideration: Plan integration early in the adoption journey to avoid duplication or visibility gaps across systems.
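
As an example of mesh-native data that an existing stack must understand, Istio exposes a standard `istio_requests_total` counter with labels such as `destination_service` and `response_code`. The sketch below parses a simplified sample in Prometheus text exposition format and computes a 5xx error share per destination, for instance to forward into a SIEM. It assumes simple, unescaped label values; in practice you would query Prometheus (for example with `rate()` over a time window) rather than parse raw cumulative counters.

```python
import re
from collections import defaultdict
from typing import Dict

# Matches simple sample lines such as:
#   istio_requests_total{destination_service="a",response_code="200"} 42
SAMPLE = re.compile(r"^(?P<name>\w+)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)")
LABEL = re.compile(r'(\w+)="([^"]*)"')  # assumes no escaped quotes


def error_rates(exposition: str) -> Dict[str, float]:
    """Share of 5xx responses per destination from istio_requests_total."""
    totals: Dict[str, float] = defaultdict(float)
    errors: Dict[str, float] = defaultdict(float)
    for line in exposition.splitlines():
        match = SAMPLE.match(line)
        if not match or match["name"] != "istio_requests_total":
            continue  # skip comments, other metrics, unlabeled samples
        labels = dict(LABEL.findall(match["labels"]))
        destination = labels.get("destination_service", "unknown")
        count = float(match["value"])
        totals[destination] += count
        if labels.get("response_code", "").startswith("5"):
            errors[destination] += count
    return {d: errors[d] / totals[d] for d in totals if totals[d] > 0}


sample = """# TYPE istio_requests_total counter
istio_requests_total{destination_service="payments",response_code="200"} 95
istio_requests_total{destination_service="payments",response_code="503"} 5
"""
print(error_rates(sample))  # {'payments': 0.05}
```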

Start small, learn fast

The key to avoiding common pitfalls is to approach service mesh adoption incrementally. Rather than deploying it organization-wide from the start, begin with a small number of services or a single environment. Use this phase to validate assumptions, build internal expertise, and refine your tooling and processes.

Looking to implement service meshes?

Getting started with a service mesh is best approached in phases.

- First, assess whether your architecture truly needs one. Generally, it’s most beneficial for systems with 20 or more microservices facing communication or security challenges.

- Next, choose a mesh that fits your environment and team expertise. Begin by enabling observability features like metrics and tracing to gain visibility without changing traffic behavior.

- Once that’s in place, gradually introduce security policies and traffic controls.

- Finally, focus on building operational maturity through training, ownership, and monitoring to ensure long-term success.

Start small. Experiment. Learn. And evolve your mesh strategy alongside your microservices maturity. Done right, it becomes an invisible yet indispensable part of your modern IT stack.