Chapter 4: Enhancing cybersecurity in the IT environment

Preventing malware attacks

Applying AI to malware detection helps our IT team protect the hardware components in our IT environment from being hacked or corrupted. This requires comprehensive analysis of files, images, and other downloadable data to check for the presence of malware.

Our AI system monitors every attachment that enters our server. It also scans the code in incoming files for suspicious scripts. The attachments or files shared over email are mainly PDF and EXE files. Our system analyzes such files for malicious data by extracting and analyzing their features. Depending on the context, our system either extracts the features before the file is processed or executes the file in a protected environment.

For example, let's say a third-party vendor emails a list of its services and a price catalog as a PDF file. Our system extracts features from this file, including the creation time, author, and summary, to check that the file can be opened safely.

Our AI system can analyze a file for malware in two stages:

Static analysis

First, our system gathers information about potential malware without inspecting the code. In this stage, our system distinguishes between what a normal file is not supposed to do and what a malware file is likely to do, such as generating repeated activity or unreadable outgoing URLs. Extracting and analyzing metadata, such as file name, type, and size, can yield clues about the nature of the malware. MD5 checksums (values used to verify the integrity of a file) or other hashes can be compared against our database to see whether the malware has been spotted before.
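The metadata extraction and hash comparison described above can be sketched in Python as follows. This is a minimal illustration only, not the actual implementation: the `KNOWN_MALWARE_MD5` set stands in for the malware database, and the function names and sample payload are hypothetical.

```python
import hashlib

# Hypothetical stand-in for a database of digests of previously seen malware.
KNOWN_MALWARE_MD5 = {
    hashlib.md5(b"pretend malicious payload").hexdigest(),
}


def md5_of_bytes(data: bytes) -> str:
    """Return the MD5 checksum of the file contents as a hex string."""
    return hashlib.md5(data).hexdigest()


def static_metadata(name: str, data: bytes) -> dict:
    """Collect file name, type, size, and hash without executing anything."""
    digest = md5_of_bytes(data)
    return {
        "file_name": name,
        # File type is guessed from the extension only (an assumption).
        "file_type": name.rsplit(".", 1)[-1].lower() if "." in name else "",
        "size_bytes": len(data),
        "md5": digest,
        "previously_seen": digest in KNOWN_MALWARE_MD5,
    }
```

Calling `static_metadata("catalog.pdf", contents)` returns a dictionary whose `previously_seen` flag is true only when the file's digest is already in the set. A clean result here says nothing about new or modified malware, which is why the later stages are still needed.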

Further, our system analyzes the code by dissecting it into different components. Even during this process, the code doesn't have to be executed. A file's headers, functions, and strings provide important details on what the file was meant to do. If there is malicious intent there, our system captures it and warns the user.

However, malware can go undetected by static analysis if it is sophisticated enough. For example, let's say the PDF file from the third-party vendor generates a string at run time and then downloads a malicious file based on that dynamic string. Since static analysis doesn't run the code, this PDF file could go undetected and may provide access to a hacker posing as a third-party vendor. In situations like these, we need dynamic analysis.
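To illustrate how strings can be pulled out of a file without executing it, here is a small Python sketch in the spirit of the Unix `strings` utility. The marker list and function names are assumptions chosen for the example; they are not taken from our system.

```python
import re

# Runs of at least six printable ASCII bytes.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{6,}")

# Hypothetical indicators, chosen only for illustration.
SUSPICIOUS_MARKERS = ("http://", "https://", "powershell", "cmd.exe")


def extract_strings(data: bytes) -> list[str]:
    """Return the printable strings embedded in raw file bytes."""
    return [match.decode("ascii") for match in PRINTABLE_RUN.findall(data)]


def suspicious_strings(data: bytes) -> list[str]:
    """Keep only the strings that contain one of the markers."""
    return [
        text
        for text in extract_strings(data)
        if any(marker in text.lower() for marker in SUSPICIOUS_MARKERS)
    ]
```

A file that embeds a download URL or a shell command in plain form would surface here. A string assembled only at run time would not, which is the limitation static analysis runs into.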

Dynamic analysis

During this stage, our system executes the files in a protected black box environment and inspects the URL calls and domains they use to identify malware files. The black box environment is a virtual one that is isolated from the rest of the network, so it can execute the files without causing damage to the production environment. The black box can be rolled back to its original state after analysis, without permanent damage. In our previous example, the dynamic string will now be revealed in the black box environment and our system will alert the user immediately about the malware.

Our AI system monitors the black box environment to see how the malware modifies it. Technical indicators can reveal that the malware is modifying registry keys, IP addresses, domain names, or file path locations, or even communicating with an external hacker.
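One simple way to picture this monitoring is to compare a snapshot of the black box environment taken before execution with one taken afterward. The Python sketch below assumes a hypothetical snapshot format (a dictionary with `registry`, `files`, and `domains` entries); how snapshots are actually captured is outside its scope.

```python
def diff_snapshots(before: dict, after: dict) -> dict:
    """Report what changed between two sandbox snapshots.

    Each snapshot is assumed to look like:
    {"registry": {key: value}, "files": [paths], "domains": [names]}
    """
    reg_before, reg_after = before["registry"], after["registry"]
    return {
        # Keys that are new or whose value differs after execution.
        "registry_added_or_modified": sorted(
            key for key in reg_after if reg_before.get(key) != reg_after[key]
        ),
        "registry_deleted": sorted(set(reg_before) - set(reg_after)),
        "files_created": sorted(set(after["files"]) - set(before["files"])),
        "domains_contacted": sorted(set(after["domains"]) - set(before["domains"])),
    }
```

Any non-empty entry in the report is a technical indicator worth inspecting. Because the environment is rolled back after analysis, the `before` snapshot can be reused for the next file.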

Analyzing user and entity behavior

ManageEngine has multiple types of entities associated with it:

User machines

App servers

Database servers

FTP servers

Printers

and more

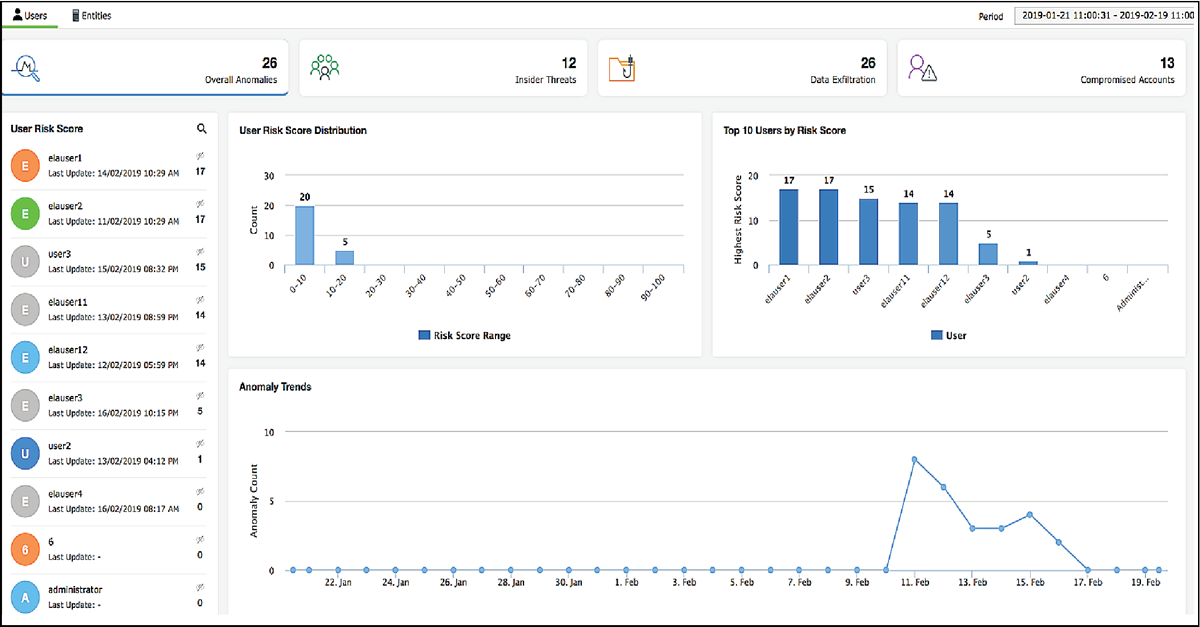

Each of these entities has a unique behavior. We treat this unique behavior as the "normal" one for that entity. Any deviation from this behavior could be a cause for concern and pose a security threat to the IT environment. Likewise, users also exhibit their own behavior. Our AI system monitors aspects of this behavior to detect account compromise, insider threats, and data exfiltration. Figure 7, below, displays how our AI system provides insights on user behavior in an IT environment:

Figure 7: User entity and behavior analysis using AI

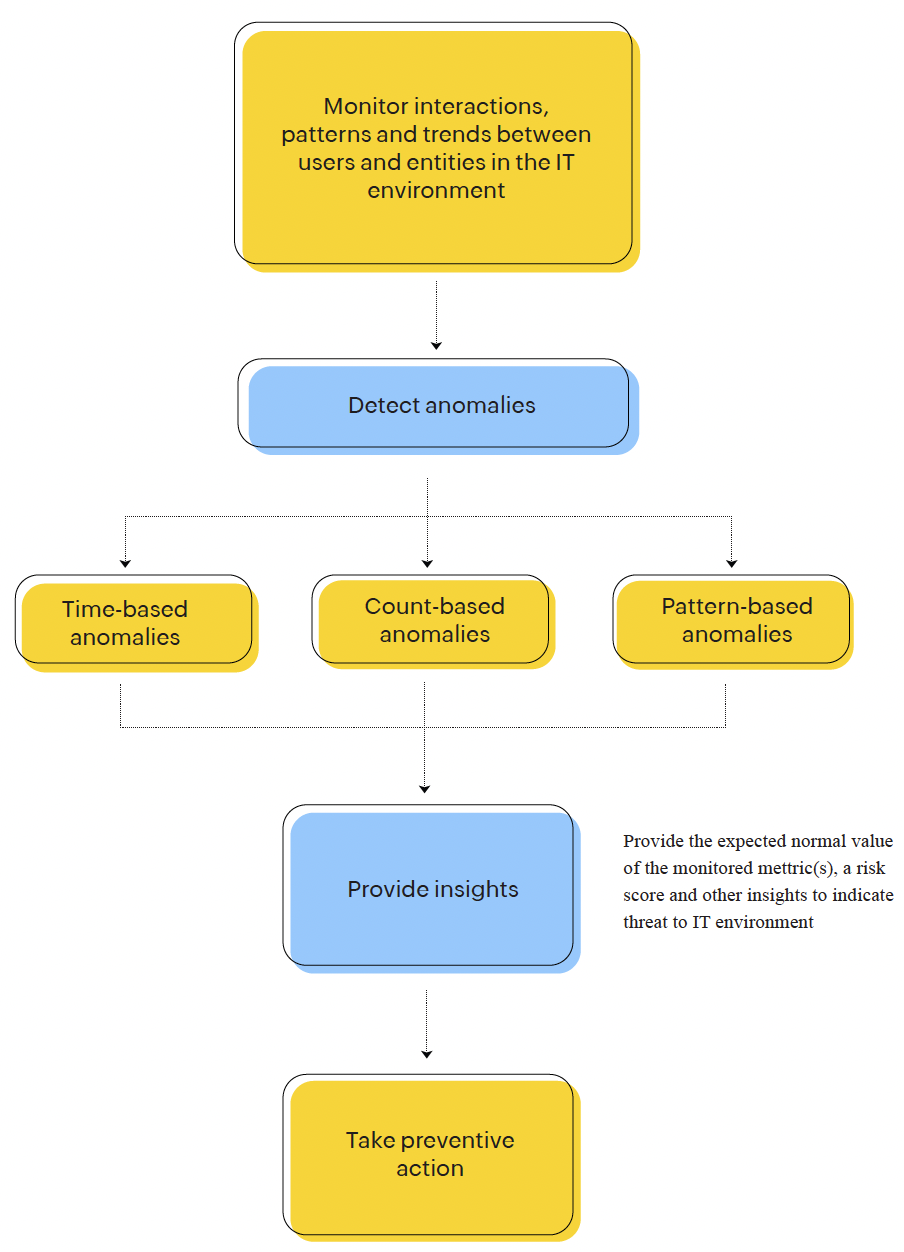

We call the analysis of "normal" behavior and deviations from it user and entity behavior analytics (UEBA). This involves monitoring different aspects of users and entities to build a baseline understanding of the normal state of the IT environment. Our AI system evaluates future behavior against this baseline and flags an anomaly in case of any deviation. Here's how our AI system establishes preventive actions based on user and entity behavior:

Figure 8: Process flow diagram for user entity and behavior analysis

Behavioral patterns

Our AI system monitors user-entity interactions, patterns, and trends to identify what the normal pattern is. Anomalies are detected as deviations from this normal behavior.

The behavioral patterns identified are classified into one of the following three categories:

1. Frequent patterns:

These are regular workflows that the user follows every day. Frequently occurring patterns are considered normal.

Example: Immediately after logging in, the user checks their emails and messages, and then moves on to other tasks.

2. Rare patterns:

These are patterns found at regular intervals of time. They do not occur every day but do occur from time to time.

Example: At the end of every month, the user logs in, checks emails and messages, and clears the stored cache before moving on to other tasks.

3. Unseen patterns:

These are patterns that the AI system has never come across before. These patterns are red flags and are of high priority. Our system immediately sends an alert to the user or the security admin.

Example: After logging in, the user directly accesses a secure region of the IT environment instead of checking emails.
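The three categories above can be illustrated with a short Python sketch that classifies a session by how often the same sequence of actions appears in a user's history. The 50% cutoff for "frequent" and the action names are assumptions made for the example, not values used by our system.

```python
from collections import Counter


def classify_pattern(pattern: tuple, history: list, frequent_share: float = 0.5) -> str:
    """Label a sequence of actions as 'frequent', 'rare', or 'unseen'.

    history is a list of past sessions, each a tuple of action names.
    frequent_share is a hypothetical cutoff on the share of past sessions.
    """
    seen = Counter(history)[pattern]
    if seen == 0:
        return "unseen"  # never observed before: highest priority
    if seen / len(history) >= frequent_share:
        return "frequent"
    return "rare"


daily = ("login", "check_email", "check_messages", "work")
monthly = ("login", "check_email", "check_messages", "clear_cache", "work")
history = [daily] * 20 + [monthly]
```

With this history, the daily sequence is labeled frequent, the month-end sequence rare, and a session that jumps from login straight to a secure region is labeled unseen.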

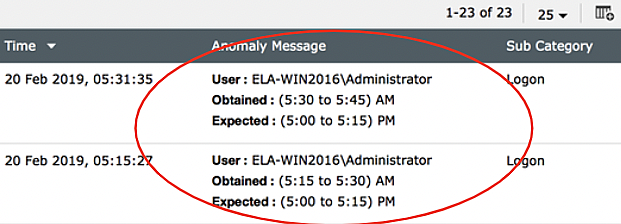

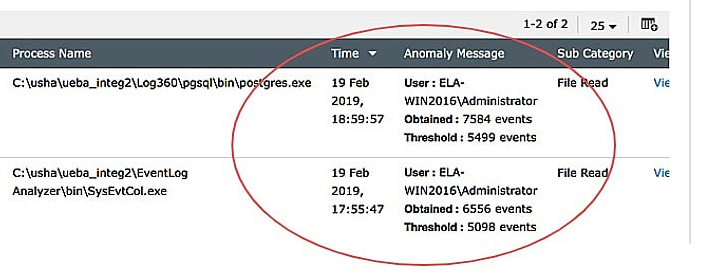

Once the user or entity deviates from their normal behavior, our system detects one of these three types of anomalies:

Challenge |

Type of anomaly |

Solution |

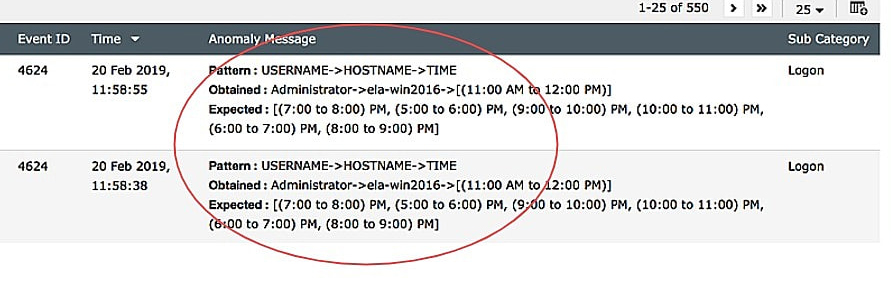

Time-based anomalies |

Anomaly detected based on the logon time of a user. These users have deviated from their normal behavior by logging on later or earlier than expected by the AI system. The message will now be triggered to IT administrators, who will take appropriate action. |

|

Count-based anomalies |

Anomaly detected based on how many times a particular user has accessed an object (in this case, a file). |

|

Pattern-based anomalies |

Anomaly detected based on a pattern of logon times by a user. The user has deviated logon and logoff patterns as depicted. |

|
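As a rough illustration of time-based and count-based anomalies, the Python sketch below compares a new observation with a user's baseline using a z-score. The z-score and the threshold of 3 are stand-ins chosen for simplicity; the source does not state which statistical method our AI system uses.

```python
from statistics import mean, stdev


def is_anomalous(value: float, baseline: list, threshold: float = 3.0) -> bool:
    """Flag a value that lies far from the baseline (simple z-score check).

    baseline needs at least two observations; threshold is an assumed cutoff.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly constant baseline: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Time-based: usual logon hours (24-hour clock, as decimals).
logon_hours = [9.0, 9.25, 8.75, 9.5, 9.0]
# Count-based: how many times per day the user usually opens a given file.
daily_file_accesses = [4, 5, 6, 5, 4]
```

Here a logon at 3:00 or 60 accesses to the same file in one day would be flagged, while a logon at 9:15 or 6 accesses would not. Logon hours that straddle midnight would need extra handling that this sketch leaves out.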

These anomalies can indicate an insider threat, account compromise, or data exfiltration, depending on the context. Our IT admins can customize which events are fed into the AI system, so they have complete control over the anomalies it detects. Our AI system adapts to changing data patterns and flags anomalies automatically, without manual intervention. Because the system doesn't have to be supervised, our IT admins can focus on taking preventive action as quickly as needed.

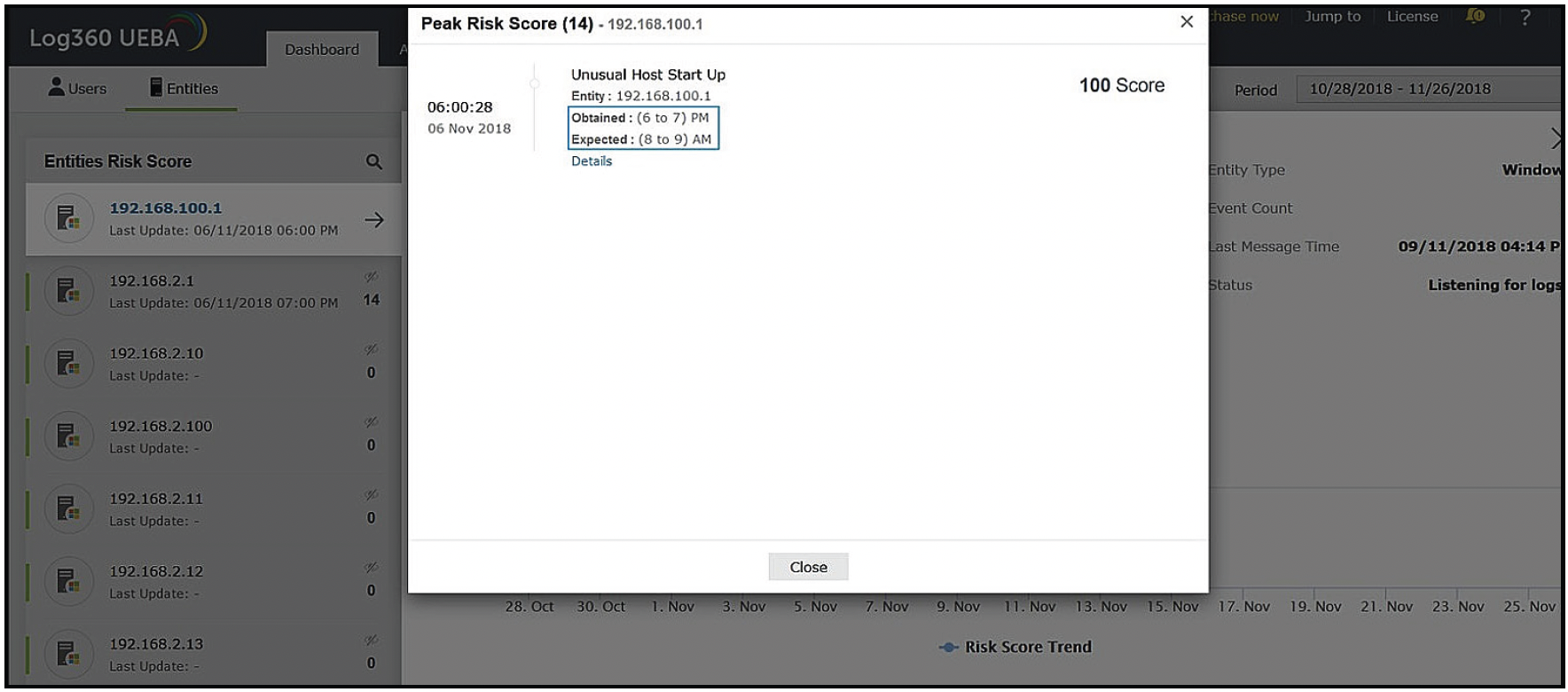

Furthermore, our AI system explains why a particular activity is deemed anomalous. This helps IT teams validate the anomalies and make informed decisions. Whether it concerns a user or an entity, each detected anomaly comes with an explanation. Figure 9, below, is an example of how our AI system represents anomalous entities' behaviors with explanations:

Figure 9: Risk score and explanations of anomalous entities