Addressing the dark side of AI with analytics

- Last Updated: December 23, 2025


The benefits of AI are undeniable: faster decisions, workflow automation, and new ways to run IT. However, as AI adoption takes off enterprise-wide, the challenges grow just as fast, including data leakage, compliance gaps, integration hurdles, and infrastructure shocks.

IT leaders like you are at a crossroads, forced to choose between innovation and security.

But should there be a trade-off?

You can overcome these challenges and lead safer AI adoption with unified IT analytics that bring visibility into AI usage, threats, and compliance gaps, and that embed AI assistants seamlessly into existing workflows. Let's see how, with real-world examples.

Challenges that emerge with increasing AI adoption in IT

As teams rush to embed AI tools, from generative models to AI agents, into their workflows, new risks surface in ways most IT environments aren't prepared for.

Here are the five most pressing challenges IT teams must address for secure and sustainable AI adoption.

1. Increased cyberattacks and shadow AI

AI widens the attack surface by opening new infiltration points. At the same time, attackers are weaponizing AI to increase the scale and sophistication of cyberattacks. Automated spear-phishing emails and AI-generated malware are no longer experimental.

Compounding these threats is the rise of shadow AI: the use of unauthorized AI tools by employees. A study by ManageEngine found that a staggering 93% of employees admitted to inputting enterprise data into unapproved AI platforms. This uncontrolled usage not only raises the risk of accidental data leaks but also leads to severe compliance issues.

2. Regulatory compliance gaps

Rapid AI adoption makes it harder for enterprises to stay within regulatory boundaries. AI applications can process large volumes of data, store data across borders, or lack audit trails, all of which undermine adherence to GDPR, HIPAA, and ISO 42001.

Without oversight, the sheer scale and pace of AI creates blind spots in how data is accessed, moved, or shared. As a result, IT leaders risk falling out of compliance, exposing their enterprises to legal penalties, reputational damage, and failed compliance audits.

3. Lack of seamless AI integration with existing IT tools and workflows

A common roadblock in enterprise AI adoption is integration. Most AI assistants operate outside the IT tool stack, forcing teams to switch contexts, manually export data, or stitch together insights. This disrupts workflows and limits the AI's ability to understand the entire IT environment, making its insights generic and often unreliable.

4. Dynamic infrastructure load and computational power

AI workloads can place unexpected strain on existing IT infrastructure. GPU-intensive tasks, high compute cycles, and increased storage needs may degrade the performance of other business-critical systems if resource utilization is not balanced or tracked closely.

5. Inability to track enterprise-wide AI implementation cost and ROI

AI implementations often span multiple departments and projects, making it difficult to track the total cost of ownership. Without oversight, IT leaders struggle to know how much is being invested, which projects are underperforming, and whether outcomes justify the spend. Budgets balloon quickly, with hidden costs like cloud scaling and redundant licenses draining resources.

Six ways to tackle AI challenges with IT analytics

Unified IT analytics gives IT leaders the visibility and control they need over AI adoption. Here are six proven ways to tackle these real-world AI adoption challenges.

1. Proactive threat detection of AI-driven cyberattacks

According to the OWASP Top 10 for LLM Applications, prompt injection ranks first among security risks for AI applications. Attackers can inject malicious prompts to override safety controls, causing AI models to leak sensitive data or perform unintended actions.

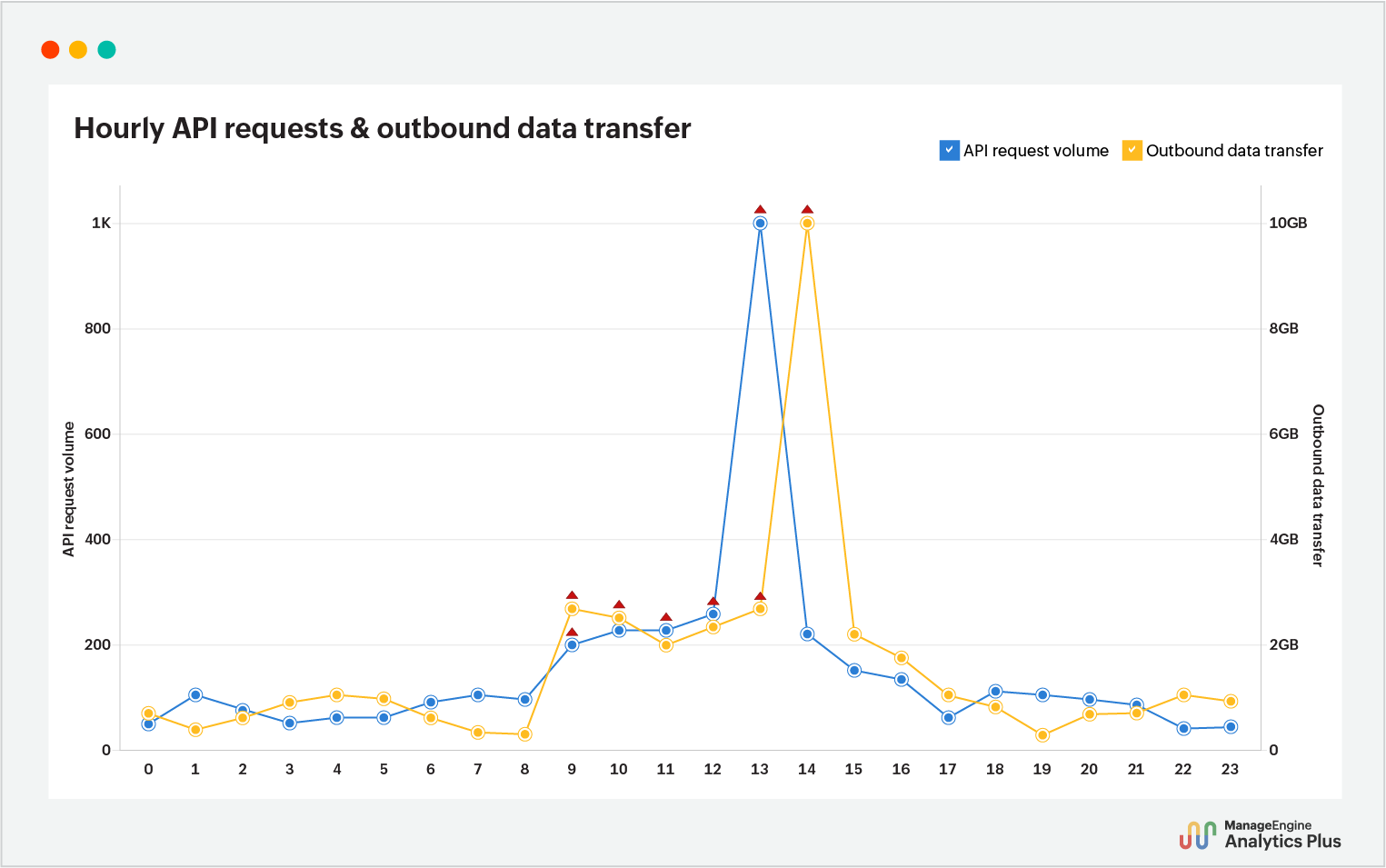

You can catch prompt injection attempts early by tracking anomalies such as unusually high or frequent API requests from AI applications, unexpected outbound data transfers, and suspicious user behavior tied to AI interactions.

Let's consider the case of a chat assistant, one of the most common applications of AI. Attackers can enter malicious prompts through such public-facing chat interfaces and manipulate the model into performing unauthorized actions. When the assistant is integrated with enterprise applications like a CRM, these actions can show up as a spike in API activity.

Here's one way you can use analytics to automatically identify anomalies in metrics that signal prompt injection risks.

With automated anomaly detection, this analysis captures subtle yet damaging trends that static thresholds often miss. This helps security teams stop possible prompt injections before they escalate into large-scale data exfiltration and expose sensitive information.

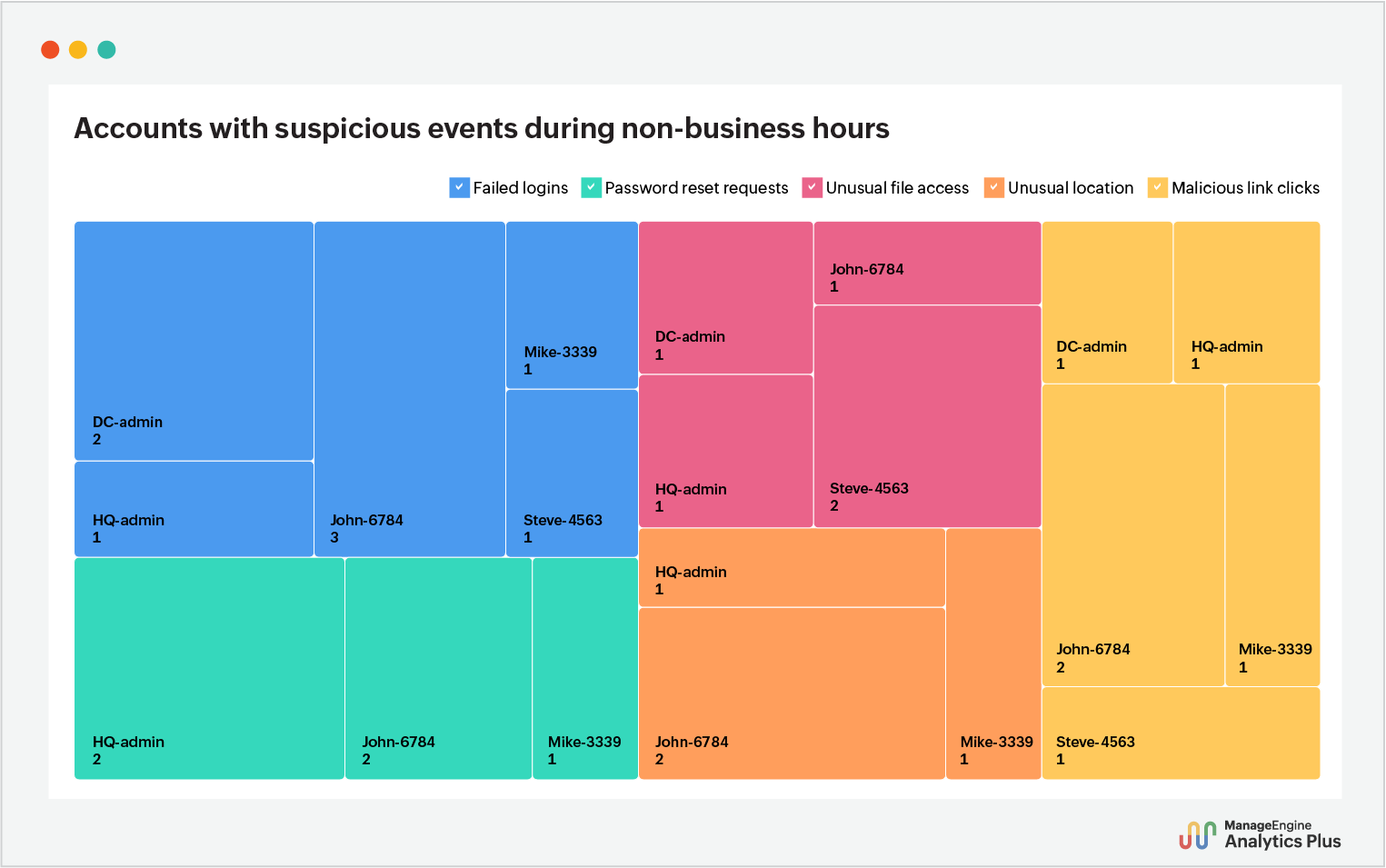
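As an illustration only, the sketch below shows one common way to flag such anomalies: a rolling z-score over hourly API call counts from a chat assistant. It is not necessarily the method Analytics Plus uses. An hour is flagged when its count sits more than `threshold` standard deviations above the mean of the preceding `window` hours, so the baseline adapts over time instead of relying on a fixed limit. The function name, parameters, and sample data are hypothetical.

```python
from statistics import mean, stdev


def detect_spikes(counts, window=24, threshold=3.0):
    """Return indices of values that spike above a rolling baseline.

    A point is flagged when it lies more than `threshold` standard
    deviations above the mean of the preceding `window` points.
    Only upward spikes are flagged, since a surge in API calls is the
    signal of interest here.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    spikes = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat baseline: treat any increase as a spike.
            if counts[i] > mu:
                spikes.append(i)
        elif (counts[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical hourly CRM API calls made by a chat assistant.
hourly_calls = [120, 118, 125, 130, 122, 119, 127, 121, 124, 126, 480, 123]
print(detect_spikes(hourly_calls, window=6))  # → [10]
```

Only upward deviations are flagged, because a surge in calls is the signal described above. A production detector would also need to account for daily and weekly seasonality, which this sketch ignores.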

While prompt injection turns AI itself into the target, attackers also use AI to amplify existing threats in new, convincing ways. For example, AI has made phishing emails more convincing than ever. GenAI models can craft flawless, personalized messages that mimic colleagues or executives, and trick even vigilant employees. The result is a surge in credential theft, account compromise, and insider data leaks.

User behavior analysis provides a reliable way to fight back. By monitoring malicious link clicks, failed login spikes, non-business hours access, frequent password resets, or unusual file access right after suspicious emails, IT teams can spot when an account may have been compromised by phishing attacks.

This analysis helps you find the hidden traces of AI-powered phishing campaigns, showing the top accounts that clicked malicious links, experienced failed logins or password reset attempts, or logged in from unusual locations. By intuitively categorizing these signals, this analysis highlights the accounts most likely to be compromised, helping IT teams act before the attack spreads.
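The signals listed above can be combined into a single risk score per account. The sketch below is a hypothetical weighted-sum scoring scheme in Python; the weights, category cut-offs, and field names are assumptions for illustration and would need tuning against your own incident history.

```python
from dataclasses import dataclass

# Hypothetical weights: a malicious link click counts far more than one failed login.
WEIGHTS = {
    "malicious_link_clicks": 5,
    "failed_logins": 1,
    "password_resets": 2,
    "off_hours_logins": 1,
    "unusual_location_logins": 3,
}


@dataclass
class AccountSignals:
    account: str
    malicious_link_clicks: int = 0
    failed_logins: int = 0
    password_resets: int = 0
    off_hours_logins: int = 0
    unusual_location_logins: int = 0


def risk_score(s: AccountSignals) -> int:
    """Weighted sum of the phishing-related signals for one account."""
    return sum(weight * getattr(s, signal) for signal, weight in WEIGHTS.items())


def categorize(score: int) -> str:
    # Hypothetical cut-offs separating the three categories.
    if score >= 15:
        return "likely compromised"
    if score >= 6:
        return "suspicious"
    return "normal"


def top_risky_accounts(accounts, n=5):
    """Return (account, score, category) for the n highest-scoring accounts."""
    ranked = sorted(accounts, key=risk_score, reverse=True)
    return [(a.account, risk_score(a), categorize(risk_score(a))) for a in ranked[:n]]


accounts = [
    AccountSignals("alice", malicious_link_clicks=1, failed_logins=6,
                   password_resets=2, unusual_location_logins=1),
    AccountSignals("bob", failed_logins=2),
    AccountSignals("carol", malicious_link_clicks=1, off_hours_logins=2),
]
for row in top_risky_accounts(accounts, n=3):
    print(row)
```

A weighted sum keeps the scoring explainable: an analyst can see exactly which signals pushed an account into the "likely compromised" bucket.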

2. Automated shadow AI usage and data exposure risk remediation

Shadow AI can’t be governed if it isn’t visible. Tracking and mitigating enterprise-wide usage of unauthorized AI applications and the sensitive data they expose means stitching together insights from endpoint logs, cloud services, file tracking logs, and network signals. By the time that manual correlation is done, sensitive data may already be exposed.

Also, traditional analytics burdens IT teams with countless reports, leaving them to uncover insights manually and delaying critical decision-making.

Conversational AI like Ask Zia in Analytics Plus eliminates both of these bottlenecks. Instead of manually analyzing complex reports, security analysts can simply ask, "Show me the trend of unauthorized applications usage and sensitive file uploads," and get instant visibility into the enterprise-wide shadow AI footprint and data exposure risks.

Beyond just delivering surface-level insights, Ask Zia uses its data-driven reasoning engine to find key drivers behind increasing shadow AI usage and recommend real-time, actionable remediation strategies to fix each of them.

These recommendations aren't just vague steps. They are contextual, data-backed, and designed for immediate execution, so IT teams don’t waste time figuring out the next move.

With Ask Zia, the remediation process shrinks from hours to minutes, helping IT teams contain shadow AI risks before sensitive data exposure grows.
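Whatever interface sits on top, measuring the shadow AI footprint starts with correlating traffic logs against an inventory of AI services. The following Python sketch assumes proxy log records have already been parsed into dictionaries with hypothetical `user`, `department`, `domain`, and `bytes_out` fields, and that you maintain your own lists of known and sanctioned AI domains (the `.example` domains are placeholders). It counts, per department, the users reaching unsanctioned AI services and the bytes they uploaded.

```python
from collections import defaultdict

# Placeholder inventories: replace with your own catalog of AI services.
KNOWN_AI_DOMAINS = {"assistant-a.example", "assistant-b.example", "codegen-c.example"}
SANCTIONED_AI_DOMAINS = {"assistant-a.example"}


def shadow_ai_summary(proxy_log):
    """Aggregate unsanctioned AI traffic per department.

    Each record is a dict with 'user', 'department', 'domain', and
    'bytes_out' (bytes uploaded in the request).
    """
    per_dept = defaultdict(lambda: {"users": set(), "bytes_out": 0})
    for rec in proxy_log:
        domain = rec["domain"].lower()  # domain names are case-insensitive
        if domain in KNOWN_AI_DOMAINS and domain not in SANCTIONED_AI_DOMAINS:
            dept = per_dept[rec["department"]]
            dept["users"].add(rec["user"])
            dept["bytes_out"] += rec["bytes_out"]
    # Replace user sets with distinct-user counts for reporting.
    return {name: {"users": len(d["users"]), "bytes_out": d["bytes_out"]}
            for name, d in per_dept.items()}


proxy_log = [
    {"user": "u1", "department": "Finance", "domain": "assistant-b.example", "bytes_out": 52_000},
    {"user": "u2", "department": "Finance", "domain": "Assistant-B.example", "bytes_out": 8_000},
    {"user": "u1", "department": "Finance", "domain": "codegen-c.example", "bytes_out": 1_000},
    {"user": "u3", "department": "HR", "domain": "assistant-a.example", "bytes_out": 4_000},
    {"user": "u4", "department": "Sales", "domain": "intranet.example", "bytes_out": 500},
]
print(shadow_ai_summary(proxy_log))
# → {'Finance': {'users': 2, 'bytes_out': 61000}}
```

Uploaded bytes are only a rough proxy for exposure; confirming whether the payload was sensitive requires file tracking or DLP data, which this sketch does not model.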

3. Building a unified AI compliance dashboard

Regulations evolve, employees experiment with new tools, and sensitive data can slip into unauthorized systems without warning. Traditional audits or manual policy reviews are too slow to keep up with this pace. What enterprises need is continuous, real-time oversight, and that’s exactly what intelligent dashboards deliver.

A powerful AI compliance dashboard brings together metrics from across your IT environment to show whether AI usage aligns with policies and regulations. IT teams get real-time visibility into questions such as which departments are using unauthorized AI apps, what risks have been identified and how severe they are, and whether usage aligns with ISO 42001, GDPR, or HIPAA requirements.

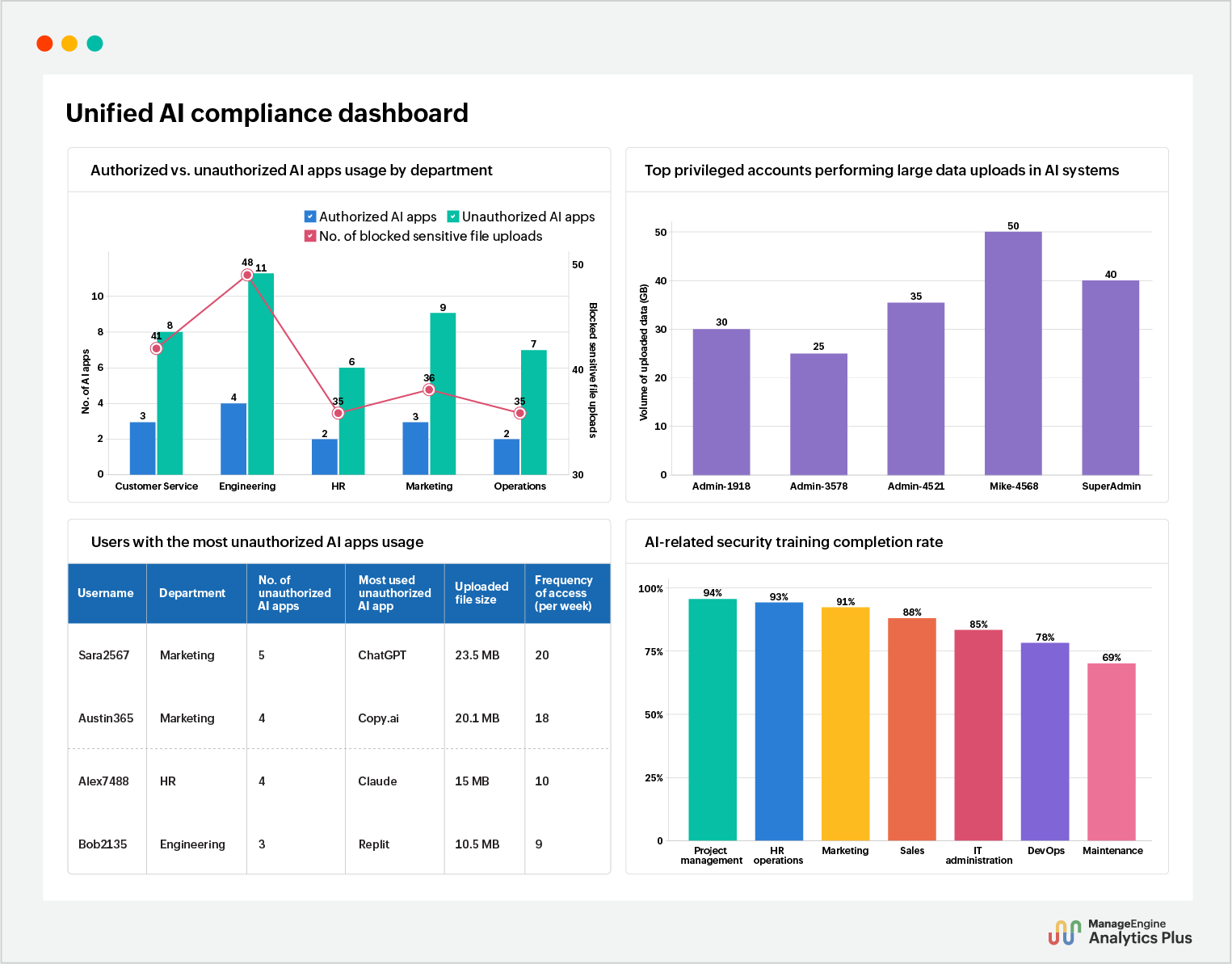

Here's a comprehensive AI compliance dashboard tracking key metrics and scenarios that affect an enterprise's adherence to compliance frameworks.

This AI compliance dashboard gives leaders a clear view of how well an enterprise is responding to the new risks created by rapid AI adoption. It highlights where governance measures are already working, such as sanctioned tools in use, blocked risky actions, and completed training, while also surfacing blind spots where compliance hasn’t caught up.

With this dashboard in hand, IT leaders can tie operational data back to standards for data protection, access management, risk assessment, and security awareness. This lets them demonstrate accountability, respond to audits, and strengthen their overall compliance posture as AI adoption accelerates.
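The governance measures such a dashboard tracks, like sanctioned tool usage, blocked risky actions, and completed training, reduce to simple ratios per department. The Python sketch below is a hypothetical illustration: the field names and the 90% target are assumptions, and it does not map any KPI to a specific ISO 42001, GDPR, or HIPAA requirement.

```python
from dataclasses import dataclass


@dataclass
class DeptActivity:
    department: str
    ai_sessions_total: int
    ai_sessions_sanctioned: int
    risky_actions_attempted: int
    risky_actions_blocked: int
    employees: int
    employees_trained: int


def pct(part, whole):
    # Treat "nothing to measure" (e.g. no risky actions attempted) as fully compliant.
    return 100.0 if whole == 0 else round(100.0 * part / whole, 1)


def compliance_kpis(d: DeptActivity) -> dict:
    return {
        "sanctioned_usage_pct": pct(d.ai_sessions_sanctioned, d.ai_sessions_total),
        "risky_actions_blocked_pct": pct(d.risky_actions_blocked, d.risky_actions_attempted),
        "training_completion_pct": pct(d.employees_trained, d.employees),
    }


def blind_spots(depts, target=90.0):
    """Return (department, kpi, value) for every KPI below the target."""
    return [(d.department, kpi, value)
            for d in depts
            for kpi, value in compliance_kpis(d).items()
            if value < target]


depts = [
    DeptActivity("Engineering", 400, 380, 20, 19, 50, 48),
    DeptActivity("Marketing", 200, 120, 10, 6, 30, 15),
]
for gap in blind_spots(depts):
    print(gap)
```

Departments that clear every target drop out of the list, so what remains is exactly the set of blind spots worth reviewing before an audit.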

4. Embedding AI assistants across the existing IT stack

For IT leaders, integrating AI into existing IT tools is rarely seamless: it requires complex setup and disrupts ongoing workflows. Moreover, AI assistants often sit outside the IT ecosystem, forcing teams to jump between tools and chatbots that lack environmental context.

The solution lies in AI that can be embedded directly into your IT ecosystem. Instead of acting as a disconnected chatbot, an embedded AI assistant lives inside your service desk, endpoint management, or monitoring tools—where the data already resides and where teams already work. These AI assistants deliver unmatched context across the IT stack, expediting strategic action.

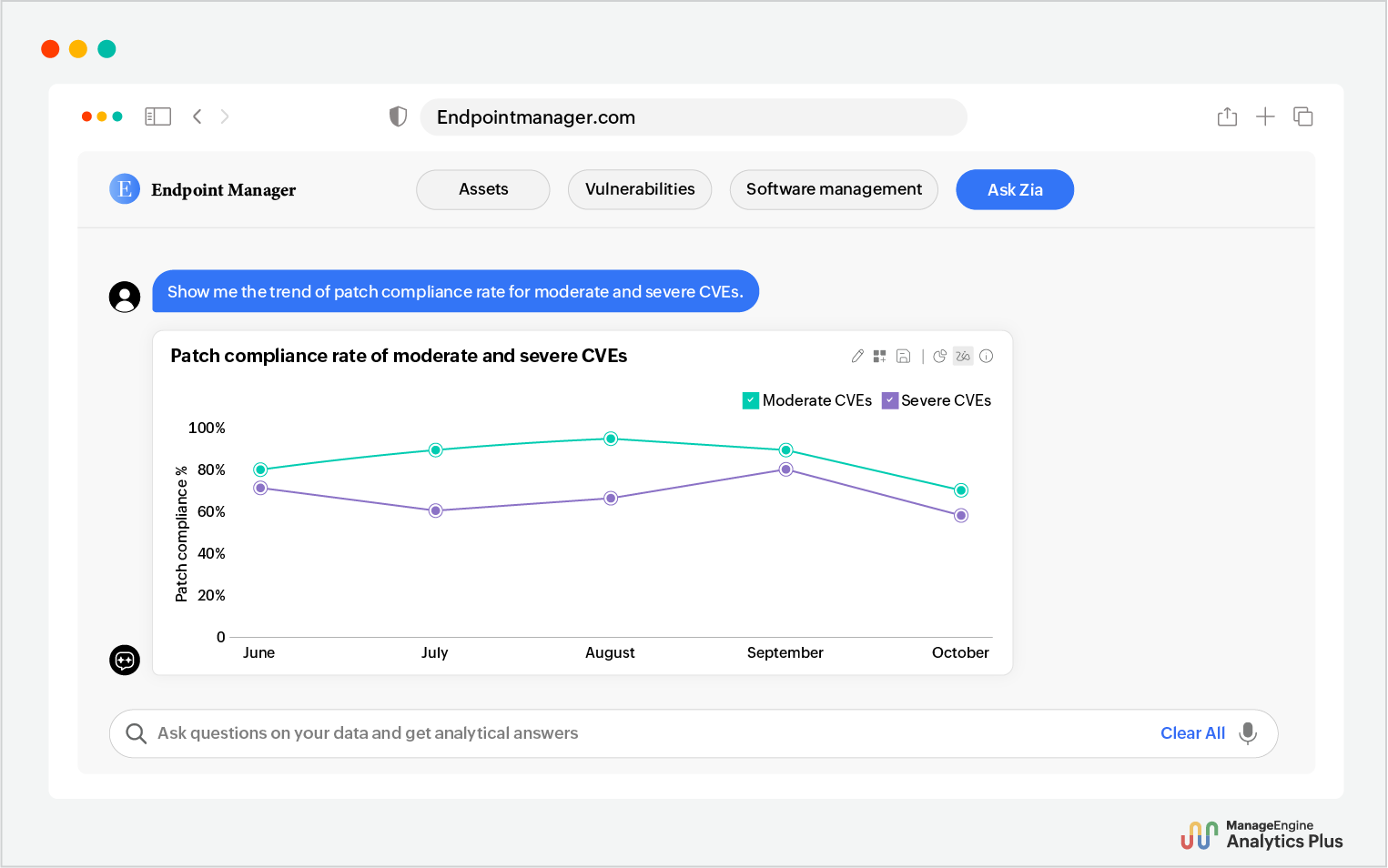

Here's how Analytics Plus' AI assistant, Ask Zia, embeds into an endpoint management platform and delivers contextual answers to your queries.

By integrating directly with existing platforms, Ask Zia becomes an intelligence layer across every step of IT operations, enhancing workflows without disrupting them.

5. AI infrastructure load forecasting and scaling

Fluctuating infrastructure demand from AI projects makes it difficult for IT teams to allocate resources efficiently. When resources fall short, IT leaders are forced to spin up last-minute, on-demand cloud instances to keep projects moving. While effective in the short term, these emergency allocations drive up operational costs.

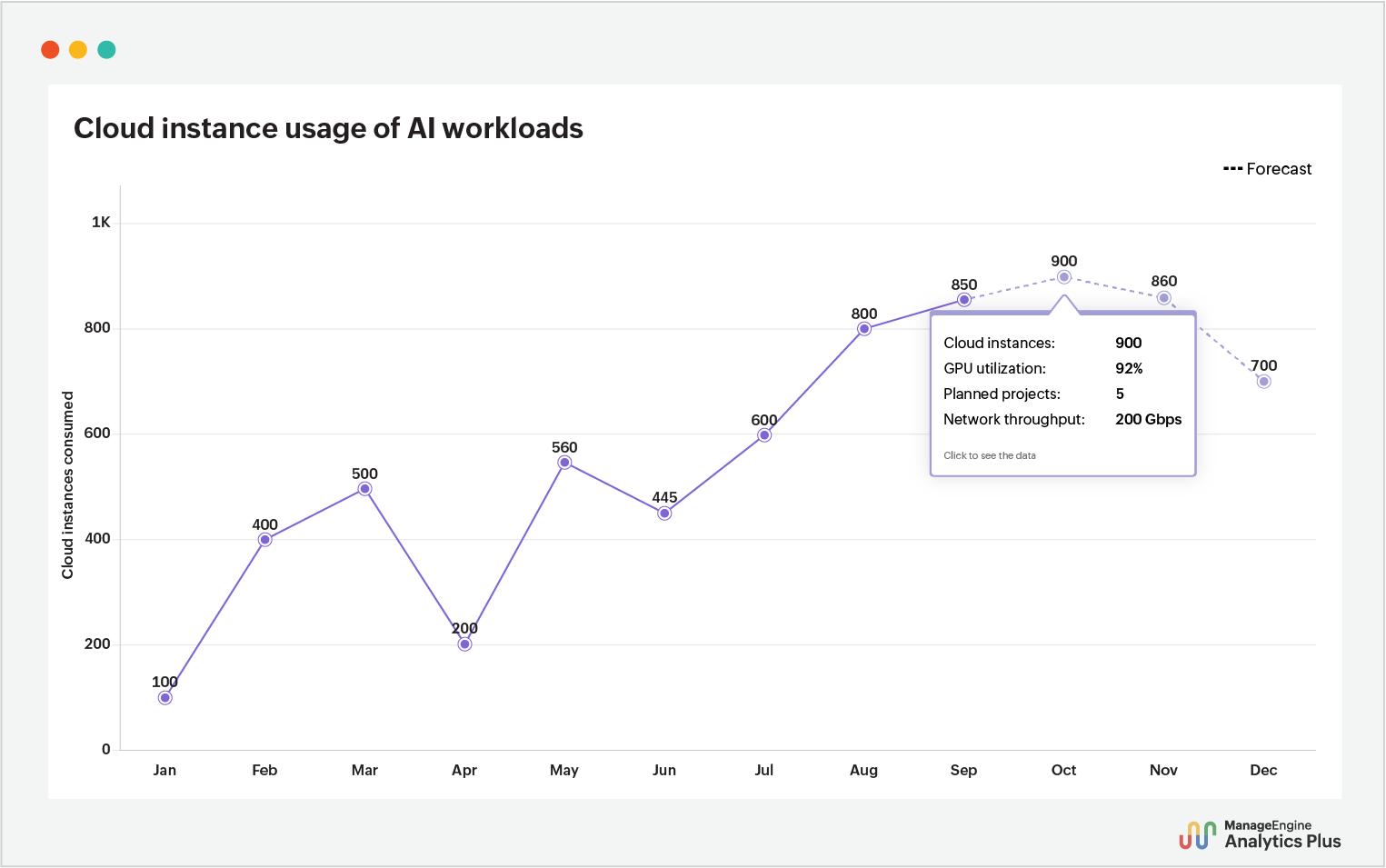

The solution is to analyze historical infrastructure usage alongside dynamic factors like peak project cycles, active AI projects, and workload patterns to predict resource demand more accurately. This way, IT leaders can anticipate when demand for cloud resources will spike and allocate resources efficiently.

Traditional univariate forecasting relies solely on a metric's own historical trend and overlooks key interdependencies, which makes it unreliable in dynamic, evolving IT environments such as those in the midst of AI adoption.

The analysis above uses multivariate forecasting to combine historical trends with dynamic factors like GPU utilization, planned projects, and network throughput, predicting the resource demand that AI adoption and expansion create. With these insights in hand, IT teams can reallocate resources confidently and avoid downtime and last-minute cloud instance purchases.
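To make the univariate-versus-multivariate distinction concrete, here is a minimal Python sketch of one simple multivariate approach: an ordinary least squares regression that predicts next period's resource demand from last period's demand, last period's GPU utilization, and the number of AI projects planned for the next period. It uses only the standard library and solves the normal equations directly, which is adequate for a handful of features but is not how a production forecasting engine would be built. The feature choice, function names, and sample data are assumptions, not the model behind the analysis above.

```python
def solve(a, b):
    """Solve the square linear system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: features are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, targets):
    """Least-squares coefficients [intercept, b1, b2, ...] via (X^T X) beta = X^T y."""
    X = [[1.0, *r] for r in rows]  # prepend an intercept column
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(X, targets)) for i in range(k)]
    return solve(xtx, xty)


def predict(beta, features):
    return beta[0] + sum(b * f for b, f in zip(beta[1:], features))


def build_training_set(demand, gpu_util, planned_projects):
    """Features for period t: demand[t-1], gpu_util[t-1], planned_projects[t]; target: demand[t]."""
    rows, targets = [], []
    for t in range(1, len(demand)):
        rows.append((demand[t - 1], gpu_util[t - 1], planned_projects[t]))
        targets.append(demand[t])
    return rows, targets


# Hypothetical weekly history: compute-hours, average GPU utilization (%), active AI projects.
demand = [410, 455, 470, 520, 498, 575, 610, 590, 660, 640]
gpu_util = [40, 55, 62, 48, 70, 81, 66, 90, 75, 60]
planned_projects = [2, 3, 3, 4, 2, 5, 6, 4, 7, 5]

rows, targets = build_training_set(demand, gpu_util, planned_projects)
beta = fit_ols(rows, targets)
# Forecast next week, assuming 8 AI projects are planned.
print(f"forecast compute-hours next week: {predict(beta, (demand[-1], gpu_util[-1], 8)):.0f}")
```

Because planned project counts are known ahead of time, they can feed the forecast directly, which is exactly the kind of external driver a univariate model cannot use.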

6. Enterprise-wide AI budget spend and ROI analysis

When adopting emerging AI technologies, many enterprises chase hype without accurately measuring tangible business outcomes. With spending scattered across departments and limited visibility into ROI and its impact on project progress, insights remain siloed and disconnected from strategic decision-making. This leads to inefficient resource allocation, overlapping initiatives, and missed opportunities to scale successful AI projects.

Traditional reporting makes it even harder to consolidate fragmented insights into a single view of ROI. Static reports and inconsistent metrics fail to capture the pace and complexity of evolving AI initiatives.

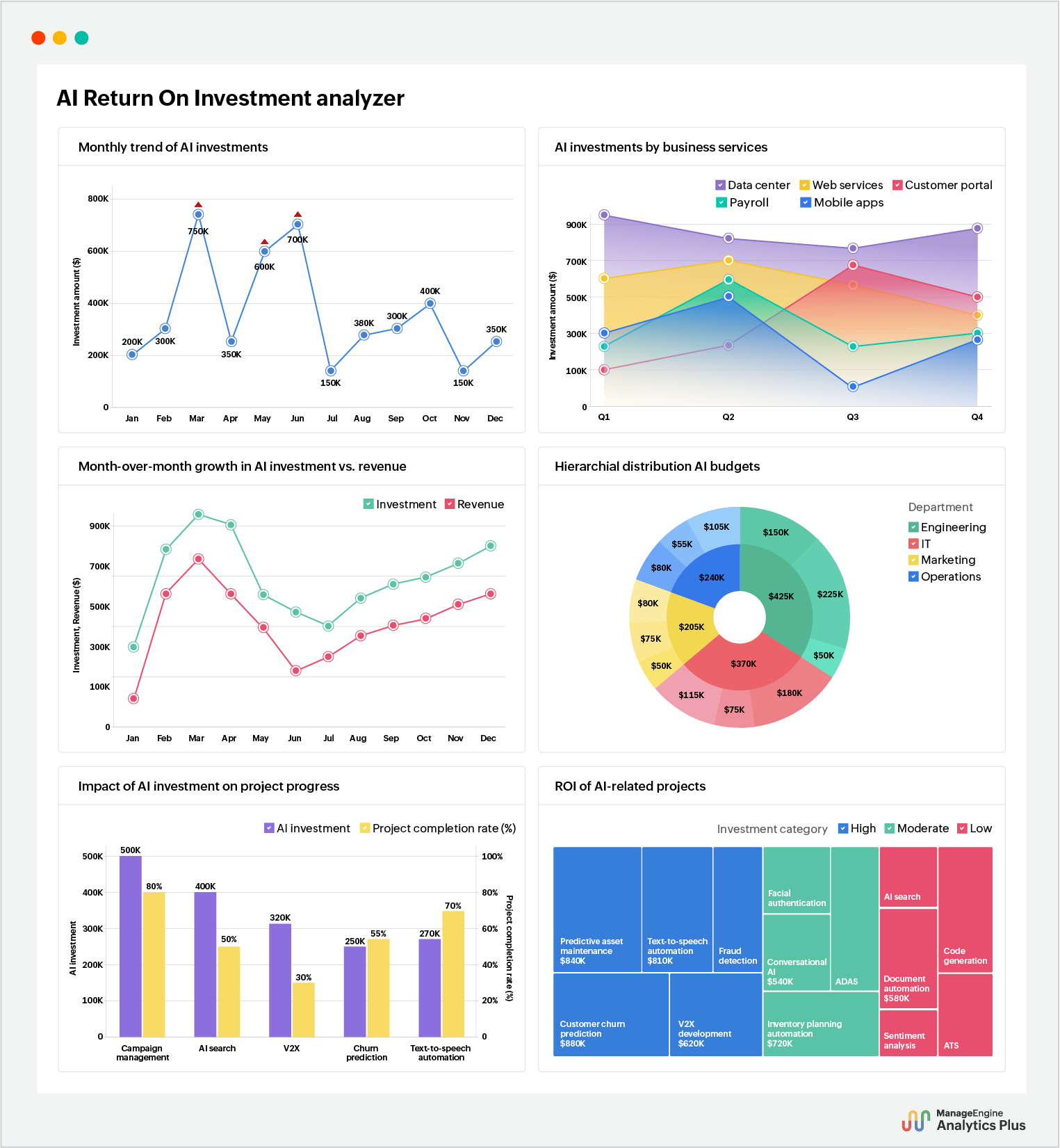

Unified IT analytics addresses these bottlenecks by offering a real-time, enterprise-wide view of AI spending versus ROI, standardizing metrics across departments, and providing actionable insights for planning cost-effective AI investments.

This dashboard correlates all AI financial and performance metrics in one unified view, helping IT leaders connect investments to measurable outcomes. It eliminates siloed reporting by tracking spending trends, revenue impact, and budget distribution across departments and projects. By correlating AI investment with project progress and ROI, it also reveals which initiatives deliver the most value.

With these actionable insights in hand, IT leaders can uncover budget inefficiencies and optimize resource allocation effortlessly.
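The core arithmetic behind this kind of ROI view is straightforward. Below is a hypothetical Python sketch: ROI is computed as (value - spend) / spend, rolled up by department, and projects that are well along in delivery yet still show negative ROI are flagged as underperforming. The record fields, the 50% progress cut-off, and the sample figures are assumptions; how "value" is measured (revenue impact, cost savings) is a business decision the code does not make.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AIProject:
    name: str
    department: str
    spend: float         # total cost to date: licenses, cloud, staff time
    value: float         # measured benefit to date: revenue impact or savings
    progress_pct: float  # delivery progress, 0-100


def roi_pct(spend, value):
    """ROI = (value - spend) / spend, as a percentage rounded to one decimal."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return round(100.0 * (value - spend) / spend, 1)


def rank_projects(projects):
    """Projects sorted from highest to lowest ROI."""
    return sorted(((p.name, roi_pct(p.spend, p.value)) for p in projects),
                  key=lambda item: item[1], reverse=True)


def department_rollup(projects):
    totals = defaultdict(lambda: [0.0, 0.0])  # department -> [spend, value]
    for p in projects:
        totals[p.department][0] += p.spend
        totals[p.department][1] += p.value
    return {dept: {"spend": s, "value": v, "roi_pct": roi_pct(s, v)}
            for dept, (s, v) in totals.items()}


def underperformers(projects, min_progress=50.0):
    """Projects past min_progress percent delivery that still show negative ROI."""
    return [p.name for p in projects
            if p.progress_pct >= min_progress and roi_pct(p.spend, p.value) < 0]


projects = [
    AIProject("ticket-triage", "IT", 50_000, 90_000, 80),
    AIProject("sales-forecast", "Sales", 120_000, 100_000, 90),
    AIProject("doc-search", "IT", 30_000, 28_000, 20),
]
print(rank_projects(projects))
print(department_rollup(projects))
print(underperformers(projects))
```

Requiring a minimum progress level before flagging a project avoids penalizing initiatives that are simply too early to have returned value.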


Ensure secure and governable AI usage with IT analytics

The common thread across AI risks, whether cyberthreats, integration friction, or infrastructure demand, is that they thrive in blind spots. The strategies above use unified analytics and contextual AI assistants to eliminate those blind spots before they escalate into problems. By correlating usage patterns, flagging anomalies, and mapping activity back to compliance standards, IT leaders can turn unmanageable AI adoption into a governable one. Instead of reacting to breaches, overruns, or compliance failures, you can anticipate them, act earlier, and scale AI with confidence.

The analyses included above were created using ManageEngine Analytics Plus, an AI-powered IT analytics and decision intelligence platform. Try these analyses today with a 14-day free trial. Need to see how it fits your IT operations first? Book a personalized demo.